CHEP Trends: Multi-Threading May 24, 2012

Posted by gordonwatts in Analysis, CHEP, computers.

I find the topic of multi-threading fascinating. Moore’s law means that we now are heading to a multi-core world rather than just faster processors. But we’ve written all of our code as single threaded. So what do we do?

Before CHEP I was convinced that we needed an aggressive program to learn multithreaded programming techniques and to figure out how to re-implement many of our physics algorithms in that style. Now I’m not so sure – I don’t think we need to be nearly as aggressive.

Up to now we’ve solved things by just running multiple jobs – about one per core. That has worked out very well, and scaling is very close to linear. Great! We’re done! Let’s go home!

There are a number of efforts going on right now to convert algorithms to be multi-threaded – rather than just running jobs in parallel. For example, re-implementing a track finding algorithm to run several threads of execution. This is hard work, takes a long time, and “costs” a lot in terms of people’s time. Does it go faster? In the end, no. Or at least, not much faster than the parallel jobs! Certainly not enough to justify the effort, IMHO.

This was one takeaway from the conference this time that I’d not really appreciated previously. This is actually a huge relief: trying to make a reconstruction completely multi-threaded so that it efficiently uses all the cores in the machine is almost impossible.

But, wait. Hold your horses! Sadly, it doesn’t sound like it is quite that simple, at least in the long run. The problem is first the bandwidth between the CPU and the memory and second the cost of the memory. The second one is easy to talk about: each running instance of reconstruction needs something like 2 GB of memory. If you have 32 cores in one box, then that box needs 64 GB of main memory – or more including room for the OS.

The CPU I/O bandwidth is a bit trickier. The CPU has to access the event data to process it. Internally it does this by first asking its cache for the data, and if the data hasn’t been cached, it goes out to main memory to get it. The cache lookup is a very fast operation – perhaps a few clock cycles. Accessing main memory is very slow, however, often taking a hundred cycles or more. In short, the CPU stalls while waiting. And if there isn’t other work to do, then the CPU really does sit idle, wasting time.

Normally, to get around this, you just make sure that the CPU is trying to do a number of different things at once. When the CPU can’t make progress on one instruction, it can do its best to make progress on another. But here is the problem: if it is trying to do too many different things, then it will be grabbing a lot of data from main memory. And the cache is of only finite size – so eventually it will fill up, and every memory request will displace something already in the cache. In short, the cache becomes useless and the CPU will grind to a halt.

The way around this is to try to make as many cores as possible work on the same data. So, for example, if you can make your tracking multithreaded, then the multiple threads will be working on the same set of tracking hits. Thus you have data for one event in memory being worked on by, say, 4 threads. In the other case, you have 4 separate jobs, all doing tracking on 4 different sets of tracking hits – which puts a much heavier load on the cache.
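To make the shared-data idea concrete, here is a minimal sketch in plain C++ (standard library only). It is not real tracking code: the `Hit` struct, the "count hits inside a radius" task, and both function names are made up for illustration. The point is only that several threads read one copy of an event's hits instead of each job loading its own event.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical hit type: a 2D position in the tracker.
struct Hit {
    double x;
    double y;
};

// Count the hits in [begin, end) that lie within `radius` of the origin.
long count_in_range(const std::vector<Hit>& hits, std::size_t begin,
                    std::size_t end, double radius) {
    long n = 0;
    for (std::size_t i = begin; i < end; ++i) {
        if (hits[i].x * hits[i].x + hits[i].y * hits[i].y <= radius * radius) ++n;
    }
    return n;
}

// Split one event's hits across `n_threads` workers. All workers read the
// same vector, so only one copy of the event data has to be in memory.
long count_hits_parallel(const std::vector<Hit>& hits, double radius,
                         unsigned n_threads) {
    assert(n_threads > 0);
    std::vector<long> partial(n_threads, 0);
    std::vector<std::thread> workers;
    const std::size_t chunk = (hits.size() + n_threads - 1) / n_threads;
    for (unsigned t = 0; t < n_threads; ++t) {
        const std::size_t begin = std::min(hits.size(), t * chunk);
        const std::size_t end = std::min(hits.size(), begin + chunk);
        // Each worker writes only to its own slot of `partial`.
        workers.emplace_back([&hits, &partial, t, begin, end, radius] {
            partial[t] = count_in_range(hits, begin, end, radius);
        });
    }
    long total = 0;
    for (unsigned t = 0; t < n_threads; ++t) {
        workers[t].join();
        total += partial[t];
    }
    return total;
}
```

Calling `count_hits_parallel(hits, radius, 4)` should give the same answer as the single-threaded `count_in_range` over the whole vector. Whether it is actually faster is exactly the kind of thing that has to be measured on the real machine.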

In retrospect the model in my head was all one or the other. You either ran a job for every core and did it single threaded, or you made one job use all the resources on your machine. Obviously, what we will move towards is a hybrid model. We will multi-thread those algorithms we can easily, and otherwise run a large number of jobs at once.

The key will be testing – to make sure something like this actually works faster. You can even imagine altering the scheduler in the OS to help (yikes!). Up to now we’ve not hit the memory-bandwidth limit. I think I saw a talk several years ago that said that, for a CMS reconstruction executable, the limit was hit somewhere around 16 or so cores per CPU. So we still have a ways to go.

So, relaxed here in HEP. How about the real world? There I see alarm bells going off – everyone is pushing multi-threading hard. Are we really different? And I think the answer is yes: there is one fundamental difference between them and us. We have a simple way to take advantage of multiple cores: run multiple jobs. In the real world many problems can’t do that – so they are not getting the benefit of the increasing number of cores unless they specifically do something about it. Now.

So, to conclude, some work moving forward on multithreaded re-implementation of algorithms is a good idea. As far as solving the above problem goes, it is less useful to make the jet finding and track finding run at the same time, and more important to make the jet finding algorithm itself and the track finding algorithm itself multithreaded.

CHEP Trends: Libraries May 24, 2012

Posted by gordonwatts in Analysis, computers.

I’m attending CHEP – Computing in High Energy and Nuclear Physics – which is being hosted by New York University this year, in New York City. A lot of fun – most of my family is on the east coast so it is cool to hang out with my sister and her family.

CHEP has been one of my favorite conference series. For a while I soured on it as the GRID hijacked it. Everything else – algorithms, virtualization, etc. – is making a comeback now, which makes the conference much more balanced and more interesting, IMHO.

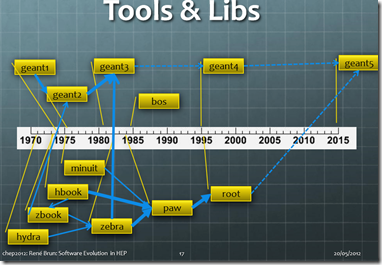

There were a few striking themes (no, one of them wasn’t me being a smart-a** – that has always been true). Rene Brun, one of the inventors of ROOT, gave a talk about the history of data analysis. Check out this slide:

A little while later Jeff Hammerbacher from Cloudera gave a talk (Cloudera bases its cloud computing business on Hadoop). Check out this slide:

These two slides show, I think, two very different approaches to software architecture. In Rene’s slide, note that all the libraries are coalescing into a small number of projects (i.e. ROOT and GEANT). As anyone who has used ROOT knows, it is a bit of a kitchen sink. The Cloudera platform, on the other hand, is a project built of many small libraries mashed together. Some of them are written in-house, others are written by other groups. All open source (as far as I could understand from the talk). This is the current development paradigm in the open source world: make lots of libraries that end programmers can put together like Lego blocks.

This trend in the web world is, I think, the result of at least two forces at play: the rapid release cycle and the agile programming approach. Both mean that you want to develop small bits of functionality in isolation, if possible, which can then be rapidly integrated into the end project. As a result, development can proceed apace on both the library and the end project, independently. However, a powerful side-effect is that it also enables someone from the outside to come along and quickly build up a new system with a few unique aspects – in short, innovate.

I’ve used the fruits of this in some of my projects: it is trivial to download and load a library into one of my projects, and with almost no work I’ve got a major building block. HTML parsers and combinator parsers are two that I’ve used recently that have meant I could ignore some major bits of plumbing, but still get a very robust solution.

Will software development in particle physics ever adopt this strategy? Should it? I’m still figuring that out.

The Square Wheel September 19, 2011

Posted by gordonwatts in Analysis, computers, LINQToTTree, ROOT.

Another geek post, I’m afraid. Last week I posted about some general difficulties I was having with doing analysis at the LHC. I actually got a fair amount of response – but all of it was people talking to me here at CERN rather than comments on the blog. So to summarize before moving on…

The biggest thing I got back was that as the corrections become well known, they get automated – so there is no need for this two step process I outlined before – running on MC and data, deriving a correction, and then running a third time to do the actual work, taking the correction into account. Rather, the ROOT files are centrally produced and the correction is applied there by the group. So the individual doesn’t have to worry. Sweet! That definitely improves life! However, the problem remains (i.e. when you are trying to derive a new correction).

I made three attempts before finally finding an analysis framework that worked (well, four if you count the traditional approach of C++, python, bash, and duct tape!). As you can tell – what I wanted was something that would correctly glue several phases of the analysis together. The example from last time:

- Correct the jet pT spectra in Monte Carlo (MC) to data:

  - Run on the full dataset and get the jet pT spectra.

  - Do the same for MC.

  - Divide the two to get the ratio/correction.

- Run over the data and reweight my plot of jet variables by the above correction.

There are basically 4 steps in this: run on the data, run on the MC, divide the results, run on the data. Ding! This looks like workflow! My first two attempts were based around this idea.

Workflow has a long tradition in particle physics. Many of our computing tasks require multiple steps and careful accounting every step of the way. We have lots of workflow systems that allow you to assemble a task from smaller tasks and keep careful track of everything that you do along the way. Indeed, all of our data processing and MC generation has been controlled by home-rolled workflow systems at ATLAS and DZERO. I would assume at every other experiment as well – it is the only way.

This approach appealed to me: I can build all the steps out of small tasks. One task that runs on data and one that runs on MC. And then add the “plot the jet pT” sub-task to each of those two, take the outputs, and then have a small generic task that would calculate a ratio, and then another task that would weight the events and finally make the plots. Easy peasy!

So, first I tried Trident, something that came out of Microsoft Research. An open source system, it was designed to work with a number of scientists with large datasets that required frequent processing (NOAA related, I think). It had an attractive UI, arbitrary data could be passed between the tasks, and the code interface for writing the tasks was pretty simple.

I managed to get some small things working with it – but there were two big things that caused it to fail. First, the way you pass around data was painful. I wanted to pass around a list of files to run on – and then from that I needed to pass around histograms. I wanted fine grained tasks that would manipulate histograms (dividing the plots) and at the same time other tasks would be manipulating whole files (making the plots). Ugh! It was a lot of work just to do something simple! The second thing that killed it was that this particular tool – at the time – didn’t have sub-jobs. You couldn’t build a workflow, and then use it in other workflows. It was my fault that I missed that fact when I was choosing the tool.

So, I moved on to a second attempt. Since my biggest problem had been hooking everything up, I decided to write my own. Instead of a GUI, I had an XML interface. And I did what is known as “coding-by-convention.” The idea is that I’d set a number of defaults into the design so that it “just worked” as long as the individual components obeyed the conventions. Since this was my own private framework there was no worry that this wouldn’t happen. The framework knew how to automatically combine similar histograms, for example, or if it was presented with multiple input datasets it knew how to combine those as well – something that would have required another step in the Trident solution.

This solution went much better – I was able to do more than just do my demo – I tried moving beyond the reweighting example above and tried to do something more complex. And here is where, I think, I hit on the real reason that workflow doesn’t work for analysis (or at least for me): you are having to switch between various environments too often. The framework was written in XML. If I wanted a new task, then I had to write C++, or C# (depending). Then there was the code that ran the framework – I’d have to upgrade that periodically.

Really, all I wanted to do was make a stupid plot on two datasets, divide it, and then make a third plot using the first as a weight. Why did I need different languages and files to do that – why couldn’t I write that in a few lines??

Those of you who are active in this biz, of course, know the answer: two different environments. One set of code deals with looping over, possibly, terabytes of data. That is the loop that makes the plot. Then you need some procedural code to do the histogram division. When that is done, you need another loop of code to do the final plots and reweighting. Take a step back. That is a lot of support code that I have to write! Loading up the MC and data files, running the loop over them, saving the resulting histogram. The number of lines I actually need to create the plot and put the data into the plot? Probably about 2 or 3. The number of lines I need to actually run that job start to finish and make that plot? Closer to 150 or so, and in several files, some compiled and some interpreted. Too much ceremony: 150 lines of boilerplate for 3 or so lines of the interesting physics code.

So, I needed something better. More on that next week.

BTW, the best visual analysis workflow I’ve seen (but not used) is something called VISPA. Had I known about it when I started the above project I would have gone to it first – it is cross platform, has a batch manager integrated, etc. (a fairly extensive list). In retrospect it looks like it could support most of what I need to do. I say this having done only a quick scan of its documentation pages. I suspect I would have run into the same problem: having to move between different environments to code up something “simple”.

Reinventing the wheel September 10, 2011

Posted by gordonwatts in Analysis, computers, LINQToTTree, ROOT.

Last October (2010) my term running the ATLAS flavor-tagging group came to an end. It was time to get back to being a plot-making member of ATLAS. I don’t know how most people feel when they run a large group like this, but I start to feel separated from actually doing physics. You know a lot more about the physics, and your input affects a lot of people, but you are actually doing very little yourself.

But I had a problem. By the time I stepped down, in order to even show a plot in ATLAS you had to apply multiple corrections: the z distribution of the vertex was incorrect, the transverse momentum spectrum of the jets in the Monte Carlo didn’t match, etc. Each of these corrections had to first be derived, and then applied before someone would believe your plot.

To make your one really great plot then, let’s look at what you have to do:

- Run over the data to get the distributions of each thing you will be reweighting (jet pT, vertex z position, etc.).

- Run over the Monte Carlo samples to get the same thing

- Calculate the reweighting factors

- Apply the reweighting factors

- Make the plot you’d like to make.

If you are lucky then the various items you need to reweight are not correlated – so you can just run the one job on the Data and the one job on the Monte Carlo in steps one and two. Otherwise you’ll have to run multiple times. These jobs are either batch jobs that run on the GRID, or a local ROOT job you run on PROOF or something similar. The results of these jobs are typically small ROOT files.

In step three you have to author a small script that will extract the results from the two jobs in steps 1 and 2, and create the reweighting function. This is often no more difficult than dividing one histogram by another. One can do this at the start of the plotting job (the job you create for steps 4 and 5) or do it at the command line and save the result in another ROOT file that serves as one of the inputs to the next step.

Steps 4 and 5 can normally be combined into one job. Take the results of step 3 and apply them as a weight to each event, and then plot whatever your variable of interest is, with each event counted according to that weight. Save the result to another ROOT file and you are done!!
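Steps 3 through 5 can be sketched in a few lines of plain C++. This is only an illustration under loud assumptions: a histogram is modelled as a `std::vector<double>` of bin contents rather than a ROOT object, the `Event` struct and both function names are hypothetical, and bins with no Monte Carlo entries simply get a weight of 1.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A histogram is modelled here as a plain vector of bin contents.
using Histogram = std::vector<double>;

// Step 3: bin-by-bin ratio data/MC. Bins with no MC get a weight of 1.0
// (an arbitrary choice for this sketch) to avoid dividing by zero.
Histogram reweighting_factors(const Histogram& data, const Histogram& mc) {
    assert(data.size() == mc.size());
    Histogram weights(data.size(), 1.0);
    for (std::size_t i = 0; i < data.size(); ++i) {
        if (mc[i] != 0.0) weights[i] = data[i] / mc[i];
    }
    return weights;
}

// Hypothetical per-event record, already reduced to bin indices.
struct Event {
    std::size_t jet_pt_bin;  // bin of the quantity being reweighted
    std::size_t plot_bin;    // bin of the variable being plotted
};

// Steps 4 and 5: fill the final plot, counting each event with the weight
// of the jet pT bin it falls into.
Histogram fill_weighted(const std::vector<Event>& events,
                        const Histogram& weights, std::size_t n_plot_bins) {
    Histogram plot(n_plot_bins, 0.0);
    for (const Event& e : events) {
        plot.at(e.plot_bin) += weights.at(e.jet_pt_bin);
    }
    return plot;
}
```

The arithmetic is the easy part. Everything around it (opening files, looping over the samples, saving the results) is what makes the real job so much bigger.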

Whew!

I don’t know about you, but this looked scary to me. I had several big issues with this. First, the LHC has been running gang-busters. This means having to constantly re-run all these steps. I’d better not be doing it by hand, especially as things get more complex, because I’m going to forget a step, or accidentally reuse an old result. Next, I was going back to be teaching a pretty difficult course – which means I was going to be distracted. So whatever I did was going to have to be able to survive me not looking at it for a week and then coming back to it… and me still being able to understand what I did! Mostly, the way I normally approach something like the above was going to lead to a mess of scripts and programs, etc., all floating around.

It took me three tries to come up with something that seems to work. It has some difficulties, and isn’t perfect in a number of respects, but it feels a lot better than what I’ve had to do in the past. Next post I’ll talk about my two failed attempts (it will be a week, but I promise it will be there!). After that I’ll discuss my 2011 Christmas project which led to what I’m using this year.

I’m curious – what do others do to solve this? Mess of scripts and programs? Some sort of work flow? Makefiles?? What?? What I’ve outlined above doesn’t seem scalable!

Source Code In ATLAS June 11, 2011

Posted by gordonwatts in ATLAS, computers.

I got asked in a comment what, really, was the size in lines of the source code that ATLAS uses. I have an imperfect answer. About 7 million total. This excludes comments in the code and blank lines in the code.

The breakdown is a bit under 4 million lines of C++ and almost 1.5 million lines of python – the two major programming languages used by ATLAS. Additionally, in those same C++ source files there are about another million blank lines and almost a million lines of comments. Python contains similar fractions.

There are 7 lines of LISP. Which was probably an accidental check-in. Once the build runs the # of lines of source code balloons almost a factor of 10 – but that is all generated code (and HTML documentation, actually) – so shouldn’t count in the official numbers.

This is imperfect because these are just the files that are built for the reconstruction program. This is the main program that takes the raw detector signals and converts them into high level objects (electrons, muons, jets, etc.). There is another large body of code – the physics analysis code. That is the code that takes those high level objects and converts them into actual interesting measurements – like a cross section, or a top quark mass, or a limit on your favorite SUSY model. That is not always in a source code repository, and is almost impossible to get an accounting of – but I would guess that it is about another x10 or so in size, based on experience in previous experiments.

So, umm… wow. That is big. But it isn’t quite as big as I thought! I mentioned in the last post talking about source control that I was worried about the size of the source and checking it out. However, Linux is apparently about 13.5 million lines of code, and uses one of these modern source control systems. So, I guess these things are up to the job…

Can’t It Be Easy? June 8, 2011

Posted by gordonwatts in ROOT.

Friday night. A truly spectacular day in Seattle. I had to take half of it off and was stuck outdoors hanging out with Julia. Paula is on a plane to Finland. I’ve got a beer by my side. A youtube video of a fire in a fireplace. Hey. I’m up for anything.

So, let’s tackle a ROOT problem.

ROOT is weird. It has made it very easy to do very simple things. For example, want to draw a previously made histogram? Just double click and you’re done. Want to see what the data in one of your TTree’s looks like? Just double click on the leaf and it pops up! But, the second you want to do something harder… well, it is much harder. I’d say it was as hard to do something advanced as it was to do something intermediate in ROOT.

Plotting is an example.

Stacking the Plots

I have four plots, and I want to plot them on top of each other so I can compare them. If I do exactly what I learned how to do when I learned to plot one thing, I end up with the following:

Note all the lines are black, thin, and on top of each other. No legend. And that “stats” box in the upper right contains data relevant only to the first plot. The title strip is also only for the first plot. Grey background. Lousy font. It should probably have error bars but that is for a later time.

    h1->Draw();
    h2->Draw("SAME");
    h3->Draw("SAME");
    h4->Draw("SAME");

So, everyone has to make plots like this. This should be “easy” to make it look good! I suspect with a simple solution 90% of the folks who use ROOT would be very happy!

So, someone must have thought of this, right? Turns out… yes. It is called THStack. Its interface is dirt simple:

    THStack *s = new THStack();
    s->Add(h1);
    s->Add(h2);
    s->Add(h3);
    s->Add(h4);
    s->Draw("nostack");

And we end up with the following:

THStack actually took care of a lot of stuff behind our backs. It matched up the axes, it made sure the max and min of the plot were correct, removed the stats box, and killed off the title. So this is a big win for us! Thanks to the ROOT team. But we are not done. I don’t know about you, but I can’t tell what is what on there!
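To give a feel for the “max and min” part of that bookkeeping, here is a rough sketch in plain C++. It is not THStack’s actual implementation, which I have not read; histograms are stand-in vectors of bin contents and `common_range` is a made-up name. It just finds one y-range that fits every curve.

```cpp
#include <algorithm>
#include <utility>
#include <vector>

// A histogram is modelled here as a plain vector of bin contents.
using Histogram = std::vector<double>;

// Return the (min, max) bin content over all histograms, so that every
// curve fits on one shared y-axis. With no bins at all it returns (0, 0).
std::pair<double, double> common_range(const std::vector<Histogram>& histos) {
    bool seen = false;
    double lo = 0.0, hi = 0.0;
    for (const Histogram& h : histos) {
        for (double v : h) {
            if (!seen) {
                lo = hi = v;
                seen = true;
            }
            lo = std::min(lo, v);
            hi = std::max(hi, v);
        }
    }
    return {lo, hi};
}
```

With plain `Draw("SAME")` the axis range comes from the first histogram only, which is why taller curves drawn later can run off the top of the plot.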

Color

There are two options for telling the plots apart: color the lines or make them different patterns (dots, dashes, etc.). I am, fortunately, not color blind, and tend to choose color as my primary differentiator. ROOT defines a number of nice colors for you in the EColor enumeration… but you can’t really use it out of the box. Charitably, I would say the colors were designed to look good on the printed page – some of them are a disaster on a CRT, LCD, or beamer.

First, under no circumstances, under no situation, never. EVER. use the color kYellow. It is almost like using White on a White background. Just never do it. If you want a yellowish color, use kOrange as the color. At least, it looks yellow to me.

Second, try to avoid the default kGreen color. It is a fluorescent green. On a white or grey background it tends to bleed into the surrounding colors or backgrounds. Instead, use a dark green color.

Do not use both kPink and kRed on the same plot – they are too close together. kCyan suffers the same problem as kGreen, so don’t use it. kSpring (yes, that is the name) is another color that is too bright a green to be useful – stay away if you can.

After playing around a bit I settled on these colors for my automatic color assignment: kBlack, kBlue, TColor::GetColorDark(kGreen), kRed, kViolet, kOrange, kMagenta. The TColor class has some nice palettes (right there in the docs, even). But one thing the docs don’t have that they really should is what the constituents of EColor look like. These are the things that you are most likely to use.

Colors are tricky things. The thickness of the line can make a big difference, for example. The default 1 pixel line width isn’t enough in my opinion to really show off these colors (more on fixing that below).
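The automatic color assignment can be as simple as a loop over that list. Below is a minimal sketch in plain C++. The full listing at the end of this post uses a `ColorLoop` helper whose body is not shown, so this is a guess at its shape rather than the real thing. Color names are kept as strings so the sketch does not need ROOT, and "dark kGreen" stands in for `TColor::GetColorDark(kGreen)`.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hands out colors in a fixed order and wraps around when it runs out.
// The names are plain strings so this sketch does not depend on ROOT.
class ColorLoop {
public:
    ColorLoop()
        : colors_{"kBlack", "kBlue", "dark kGreen", "kRed",
                  "kViolet", "kOrange", "kMagenta"} {}

    // Return the next color, cycling back to the first after the last.
    const std::string& next_color() {
        const std::string& c = colors_[index_];
        index_ = (index_ + 1) % colors_.size();
        return c;
    }

private:
    std::vector<std::string> colors_;
    std::size_t index_ = 0;
};
```

Each histogram in the stack gets `next_color()` in turn. With more than seven histograms the colors repeat, at which point line patterns would have to take over.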

After applying the colors I end up with a plot that looks like the following:

A Legend and Title

So the plot is starting to look ok… at least, I can tell the difference between the various things. But darned if I can tell what each one is! We need a legend. Now, ROOT comes with the TLegend object. So, we could do all the work of cycling through the histograms and putting up the proper titles, etc. However, it turns out there is a very nice short-cut provided by the ROOT folks: TPad::BuildLegend. So, just using the code:

    c1->BuildLegend();

where c1 is the pointer to the current TCanvas (the one most often used when you are running from the command line). See below for its effect. The automatic legend has some problems – mainly that it doesn’t automatically detect the best placement when drawing a stack of histograms (left, right, up or down). One can think of a simple algorithm that would get this right most of the time. But that is for another day.
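One candidate for that simple algorithm, sketched in plain C++ and entirely my own assumption (it is not something ROOT does, as far as I know): look at the tallest bin in the left and right halves of the plot, and put the legend on the side where the curves stay lower. Histograms are stand-in vectors of non-negative bin contents, and `pick_legend_side` is a made-up name.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// A histogram is modelled here as a plain vector of bin contents.
using Histogram = std::vector<double>;

enum class LegendSide { Left, Right };

// Put the legend in the upper corner on whichever side has the lower
// tallest bin. Assumes bin contents are non-negative.
LegendSide pick_legend_side(const std::vector<Histogram>& histos) {
    double left_peak = 0.0, right_peak = 0.0;
    for (const Histogram& h : histos) {
        const std::size_t half = h.size() / 2;
        for (std::size_t i = 0; i < h.size(); ++i) {
            double& peak = (i < half) ? left_peak : right_peak;
            peak = std::max(peak, h[i]);
        }
    }
    // Ties go to the right (an arbitrary choice for this sketch).
    return left_peak < right_peak ? LegendSide::Left : LegendSide::Right;
}
```

A falling spectrum peaks on the left, so the legend goes to the right, and the other way around for a rising one. It would get fooled by a plot with peaks on both sides, which is where "up or down" would have to come in.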

Next, I’d like to have a decent title up there, similar to what was there previously. This is also easy – we just pass it in when we create the stack of histograms.

    THStack *s = new THStack("histstack", "WeightSV0");

And we now have something that is at least scientifically serviceable:

One thing to note here – there are no x-axis labels. If you add an x-axis label to your plot the THStack doesn’t copy it over. I’d call that a bug, I suppose.

Background And Lines And Fonts

We are getting close to what I think the plot should look like out of the box. The final bit is basically pretty-printing. Note the very ugly white-on-grey around the lines in the Legend box. Or the font (it is pixelated, even when the plot is blown up). Or (to me, at least) the lines are too thin, etc. This plot wouldn’t even make it past first-base if you tried to submit it to a journal.

ROOT has a fairly nice system for dealing with this. All plots and other graphing functions tend to take their cues from a TStyle object. This defines the background, etc. The default set in ROOT is what you get above. HOWEVER… it looks like that is about to change with the new version of ROOT.

Now, a TStyle is funny. A style is applied when you draw the histograms… but it is also applied when it is created. So to really get it right you have to have the proper style applied both when you create and when you draw the histogram. In short: I have an awful time with TStyle! I’m left with the choice of either setting everything in code when I do the drawing, or applying a TStyle everywhere. I’ve gone with the latter. Here is my rootlogon.C file, which contains the TStyle definition. But even this isn’t perfect. After a bunch of work I basically gave up, I’m afraid, and I ended up with this (note the #@*@ title box still has that funny background):

Conclusion

So, if you’ve made it this far I’m impressed. As you can tell, getting ROOT to draw nice plots is not trivial. This should work out of the box (using the “SAME” option that I used in the first line we should get behavior that looks a lot like this last plot).

Finally, a word on object ownership. ROOT is written in C++, which means it is very easy to delete an object that is being referenced by some other bit of the system. As a result, code has to carefully keep track of who owns what and when. For example, if I don’t write out the Canvas that I’ve generated right away, sometimes my canvases somehow come out blank. This is because something has deleted the objects from under me (it was my program obviously, but I have no idea what did it). Reference counting would have been the right way to go, but ROOT was started too long ago. Perhaps it is time for someone to start again?
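For anyone who hasn’t run into reference counting, here is a minimal sketch of the idea using `std::shared_ptr` from the C++ standard library. To be loud about it: this is not how ROOT manages its objects, and `Hist`, `Canvas`, and `make_canvas` are stand-ins invented for the example.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stand-in for a histogram; not a ROOT class.
struct Hist {
    std::string title;
};

// Stand-in for a canvas that shares ownership of whatever it draws.
struct Canvas {
    std::vector<std::shared_ptr<Hist>> drawn;
    void draw(std::shared_ptr<Hist> h) { drawn.push_back(std::move(h)); }
};

// Build a canvas in a scope where the histogram's original owner goes away.
Canvas make_canvas() {
    Canvas c;
    auto h = std::make_shared<Hist>(Hist{"WeightSV0"});
    c.draw(h);
    // `h` is destroyed on return, but the canvas still holds a reference,
    // so the histogram stays alive for as long as the canvas does.
    return c;
}
```

The histogram is deleted only when the last reference to it goes away, so nothing can delete it out from under the canvas.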

The code I used to make the plots above appears below. My actual code does more (for example, it will take the legend and automatically turn it into “lightJets”, “charmJets”, etc., instead of the full blown titles you see there). It is, obviously, not in C++, but the algorithm should be clear!

    public static ROOTNET.Interface.NTCanvas PlotStacked(this ROOTNET.Interface.NTH1F[] histos,
        string canvasName, string canvasTitle,
        bool logy = false, bool normalize = false, bool colorize = true)
    {
        if (histos == null || histos.Length == 0)
            return null;

        var hToPlot = histos;

        ///
        /// If we have to normalize first, we need to normalize first!
        ///
        if (normalize)
        {
            hToPlot = (from h in hToPlot
                       let clone = h.Clone() as ROOTNET.Interface.NTH1F
                       select clone.Normalize()).ToArray();
        }

        ///
        /// Reset the colors on these guys
        ///
        if (colorize)
        {
            var cloop = new ColorLoop();
            foreach (var h in hToPlot)
            {
                h.LineColor = cloop.NextColor();
            }
        }

        ///
        /// Use the nice ROOT utility THStack to make the plot
        ///
        var stack = new ROOTNET.NTHStack(canvasName + "StacK", canvasTitle);
        foreach (var h in hToPlot)
        {
            stack.Add(h);
        }

        ///
        /// Now do the plotting. Use the THStack to get all the axis stuff correct.
        /// If we are plotting a log plot, then make sure to set that first before
        /// calling it as it will use that information during its painting.
        ///
        var result = new ROOTNET.NTCanvas(canvasName, canvasTitle);
        result.FillColor = ROOTNET.NTStyle.gStyle.FrameFillColor; // This is not a sticky setting!
        if (logy)
            result.Logy = 1;
        stack.Draw("nostack");

        ///
        /// And a legend!
        ///
        result.BuildLegend();

        ///
        /// Return the canvas so it can be saved to the file (or whatever).
        ///
        return result;
    }

    /// <summary>
    /// Normalize this histo and return it.
    /// </summary>
    /// <param name="histo"></param>
    /// <returns></returns>
    public static ROOTNET.Interface.NTH1F Normalize(this ROOTNET.Interface.NTH1F histo, double toArea = 1.0)
    {
        histo.Scale(toArea / histo.Integral());
        return histo;
    }
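For readers who would rather see the `Normalize` step in C++, here is a rough equivalent in plain standard-library code. It is a sketch under assumptions, not a translation of the ROOT calls: the histogram is a stand-in vector of bin contents, and unlike the listing above it returns an empty (zero-integral) histogram unchanged rather than dividing by zero.

```cpp
#include <numeric>
#include <vector>

// A histogram is modelled here as a plain vector of bin contents.
using Histogram = std::vector<double>;

// Scale the bin contents so they sum to `to_area`. A histogram whose
// contents sum to zero is returned unchanged instead of dividing by zero.
// The argument is taken by value, so the caller's histogram is untouched.
Histogram normalize(Histogram h, double to_area = 1.0) {
    const double integral = std::accumulate(h.begin(), h.end(), 0.0);
    if (integral == 0.0) return h;
    for (double& v : h) v *= to_area / integral;
    return h;
}
```

Taking the histogram by value plays the same role as the `Clone()` call in the listing: the original is left alone and only the copy is scaled.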

Yes, We may Have Made a Mistake. June 3, 2011

Posted by gordonwatts in ATLAS, computers.9 comments

No, no. I’m not talking about this. A few months ago I wondered if, short of generating our own reality, ATLAS made a mistake. The discussion was over source control systems:

Subversion, Mercurial, and Git are all source code version control systems. When an experiment says we have 10 million lines of code – all that code is kept in one of these systems. The systems are fantastic – they can track exactly who made what modifications to any file under their control. It is how we keep anarchy from breaking out as >1000 people develop the source code that makes ATLAS (or any other large experiment) go.

Yes, another geeky post. Skip over it if you can’t stand this stuff.

ATLAS switched some time ago from a system called cvs to svn. The two systems are very much alike: centralized, top-down control. Old school. However, the internet happened. And, more to the point, the Cathedral and the Bazaar happened. New source control systems have sprung up. In particular, Mercurial and git. These systems are distributed. Rather than asking for permission to make modifications to the software, you just point your source control client at the main source and hit copy. Then you can start making modifications to your heart’s content. When you are done you let the owner of the repository know and tell them where your repository is – and they then copy your changes back! The key here is that you had your own copy of the repository – so you could make multiple modifications w/out asking the owner. Heck, you could even send your modifications to your friends for testing before asking the owner to copy them back.

That is why it is called distributed source control. Heck, you can even make modifications to the source at 30,000 feet (when no wifi is available).

When I wrote that first blog post I’d never tried anything but the old school source controls. I’ve now spent the last 5 months using Mercurial – one of the new style systems. And I’m sold. Frankly, I have no idea how you’d convert the 10 million+ lines of code in ATLAS to something like this, but if there is a sensible way to convert to Git or Mercurial then I’m completely in favor. Just about everything is easier with these tools… I’ve never done branch development in SVN, for example. But in Mercurial I use it all the time… because it just works. And I’m constantly flipping my development directory from one branch to another because it takes seconds – not minutes. And despite all of this I’ve only once had to deal with merge conflicts. If you look at SVN the wrong way it will give you merge conflicts.

All this said, I have no idea how git or Mercurial would scale. Clearly it isn’t reasonable to copy the repository for 10+ million lines of code onto your portable to develop one small package. But if we could figure that out, and if it integrated well into the ATLAS production builds, well, that would be fantastic.

If you are starting a small standalone project and you can choose your source control system, I’d definitely recommend trying one of these two modern tools.

16,000 Physics Plots January 12, 2011

Posted by gordonwatts in ATLAS, CDF, CMS, computers, D0, DeepTalk, physics life, Pivot Physics Plots.4 comments

Google has 20% time. I have Christmas break. If you work at Google you are supposed to have 20% of your time to work on your own little side project rather than the work you are nominally supposed to be doing. Lots of little projects are started this way (I think GMail, for example, started this way).

Each Christmas break I tend to hack on some project that interests me – but is often not directly related to something that I’m working on. Usually by the end of the break the project is useful enough that I can start to get something out of it. I then steadily improve it over the next months as I figure out what I really wanted. Sometimes they never get used again after that initial hacking time (you know: fail often, and fail early). My deeptalk project came out of this, as did my ROOT.NET libraries. I’m not sure others have gotten a lot of use out of these projects, but I certainly have. The one I tackled this year has turned out to be a total disaster. Interesting, but still a disaster. This post is about the project I started a year ago. This was a fun one. Check this out:

Each of those little rectangles represents a plot released last year by DZERO, CDF, ATLAS, or CMS (the Tevatron and LHC general purpose collider experiments) as a preliminary result. That huge spike in July – 3600 plots (click to enlarge the image) – is everyone preparing for the ICHEP conference. In all, the 4 experiments put out about 6000 preliminary plots last year.

I don’t know about you – but there is no way I can keep up with what the four experiments are doing – let alone the two I’m a member of! That is an awful lot of web pages to check – especially since the experiments, though modern, aren’t modern enough to be using something like an Atom/RSS feed! So my hack project was to write a massive web scraper and a Silverlight front-end to display it. The front-end is based on the Pivot project originally from MSR, which means you can really dig into the data.

For example, I can explode December by clicking on “December”:

and that brings up the two halves of December. Clicking in the same way on the second half of December I can see:

From that it looks like 4 notes were released – so we can organize things by notes that were released:

Note the two funny icons – those allow you to switch between a grid layout of the plots and a histogram layout. And after selecting that we see that it was actually 6 notes:

That left note is titled “Z+Jets Inclusive Cross Section” – something I want to see more of, so I can select that to see all the plots at once for that note:

And say I want to look at one plot – I just click on it (or use my mouse scroll wheel) and I see:

I can actually zoom way into the plot if I wish using my mouse scroll wheel (or typical touch-screen gestures, or on the Mac the typical zoom gesture). Note the info-bar that shows up on the right hand side. That includes information about the plot (a caption, for example) as well as a link to the web page where it was pulled from. You can click on that link (see caveat below!) and bring up the web page. Even a link to a PDF note is there if the web scraper could discover one.

Along the left hand side you’ll see a vertical bar (which I’ve rotated for display purposes here):

You can click on any of the years to get the plots from that year. Recent will give you the last 4 months of plots. By default, this is where the viewer starts up – seems like a nice compromise between speed and breadth when you want to quickly check what has recently happened. The “FS” button (yeah, I’m not a user-interface guy) is short for “Full Screen”. I definitely recommend viewing this on a large monitor! “BK” and “FW” are like the back and forward buttons on your browser and enable you to undo a selection. The info bar on the left allows you to do some of this if you want to.

Want to play? Go to http://deeptalk.phys.washington.edu/ColliderPlots/… but first read the following.

And feel free to leave suggestions! And let me know what you think about the idea behind this (and perhaps a better way to do this).

- Currently works only on Windows and the Mac. Linux will happen when Moonlight supports v4.0 of Silverlight. For Windows and the Mac you will have to have the Silverlight plug-in installed (if you are on Windows you almost certainly already have it).

- This thing needs a good network connection and a good CPU/GPU. There is some heavy graphics lifting that goes on (wait till you see the graphics animations – very cool). I can run it on my netbook, but it isn’t that great. And loading when my DSL line is not doing well can take upwards of a minute (when loading from a decent connection it takes about 10 seconds for the first load).

- You can’t open a link to a physics note or webpage unless you install this so it is running locally. This is a security feature (cross site scripting). The install is lightweight – just right click and select install (control-click on the Mac, if I remember correctly). And I’ve signed it with a certificate, so it won’t get messed up behind your back.

- The data is only as good as its source. Free-form web pages are a mess. I’ve done my best without investing an inordinate amount of time on the project. Keep that in mind when you find some data that makes no sense. Heck, this is open source, so feel free to contribute! Updating happens about once a day. If an experiment removes a plot from their web pages, then it will disappear from here as well at the next update.

- Only public web pages are scanned!!

- The biggest hole is the lack of published papers/plots. This is intentional because I would like to get them from arXiv. But the problem is that my scraper isn’t intelligent enough when it hits a website – it grabs everything it needs all at once (don’t worry, the second time through it asks only for headers to see if anything has changed). As a result it is bound to set off arXiv’s robot sensor. And the thought of parsing TeX files for captions is just… not appealing. But this is the most obvious big hole that I would like to fix at some point soon.

- This depends on public web pages. That means if an experiment changes its web pages or where they are located, all the plots will disappear from the display! I do my best to fix this as soon as I notice it. Fortunately, these are public facing web pages so this doesn’t happen very often!
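The “asks only for headers” behavior mentioned in the list above can be sketched roughly as follows. This is not the scraper's actual code (which is not written in C++); `PageHeaders` and `NeedsRefetch` are hypothetical names, and comparing the HTTP `ETag` and `Last-Modified` headers is an assumption on my part, since nothing above says which headers are actually checked.

```cpp
#include <string>

// Hypothetical record of the validators returned by an HTTP HEAD request.
// An empty string means the server did not send that header.
struct PageHeaders {
    std::string etag;
    std::string last_modified;
};

// Decide whether the full page has to be downloaded again.
// 'cached' is nullptr if the page has never been fetched before.
bool NeedsRefetch(const PageHeaders* cached, const PageHeaders& fresh) {
    if (cached == nullptr)
        return true;  // first visit: grab everything

    // Prefer the ETag when both sides have one.
    if (!cached->etag.empty() && !fresh.etag.empty())
        return cached->etag != fresh.etag;

    // Otherwise fall back to the modification date.
    if (!cached->last_modified.empty() && !fresh.last_modified.empty())
        return cached->last_modified != fresh.last_modified;

    return true;  // nothing to compare against: play it safe and refetch
}
```

The point of the design is that a header-only request is cheap for both sides, so the expensive full download only happens on the first pass or when a page really has changed.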

Ok, now for some fun. Who has the most broken links on their public pages? CDF by a long shot.

Who has the pages that are most machine readable? CMS and DZERO. But while they are that, the images have no captions (which makes searching the image database for text words less useful than it should be). ATLAS is a happy medium – their preliminary results are in a nice automatically produced grid that includes captions.

Did ATLAS Make a Big Mistake? December 16, 2010

Posted by gordonwatts in ATLAS, computers.11 comments

Ok. That is a sensationalistic headline. And, the answer is no. ATLAS is so big that, at least in this case, we can generate our own reality.

Check out this graphic, which I’ve pulled from a developer survey.

Ok, I apologize for this being hard to read. However, there is very little you need to read here. The first column is Windows users, the second Linux, and the third Mac. The key colors to pay attention to are red (Git), Green (Mercurial), and Purple (Subversion). This survey was completed just recently and had about 500 people responding. So it isn’t perfect… But…

Subversion, Mercurial, and Git are all source code version control systems. When an experiment says we have 10 million lines of code – all that code is kept in one of these systems. The systems are fantastic – they can track exactly who made what modifications to any file under their control. It is how we keep anarchy from breaking out as >1000 people develop the source code that makes ATLAS (or any other large experiment) go. Heck, I use Subversion for small little one-person projects as well. Once you get used to using them you wonder how you ever did without them.

One thing to note is that cvs, which is the grand-daddy of all version control systems and was the standard choice about 10 or 15 years ago, doesn’t even show up. Experiments like CDF and DZERO, however, are still using it. The other thing to note… how small Subversion is. Particularly amongst Linux and Mac users. It is still fairly strong in Windows, though I suspect that is in part because there is absolutely amazing integration with the operating system which makes it very easy to use. And the extent to which it is used on Linux and the Mac may also be influenced by the people that took the survey – they used twitter to advertise it and those folks are probably a little more cutting edge on average than the rest of us.

Just a few years ago Subversion was huge – about the current size of Git. And therein lies the key to the title of this post. Sometime in March 2009 ATLAS decided to switch from cvs to Subversion. At the time it looked like Subversion was the future of source control. Oops!

No, ATLAS doesn’t really care for the most part. Subversion seems to be working well for it and its developers. And all the code for Subversion is open source, so it won’t be going away anytime soon. At any rate, ATLAS is big enough that it can support the project even if it is left as one of the only users of it. Still… this shift makes you wonder!

I’ve never used Git or Mercurial – both of which are a new type of distributed source control system. The idea is that instead of having a central repository where all your changes to your files are tracked, each person has their own. They can trade batches of changes back and forth with each other without contacting the central repository. It is a technique that is used in the increasingly high speed development industry (for things like Agile programming, I guess). Also, I’ve often heard the term “social coding” applied to Git as well, though it sounds like that may have to do more with the GitHub repository’s web page setup than the actual version control system. It is certainly true that anyone I talk to raves about GitHub and other things like that. While I might not get it yet, it is pretty clear that there is something to “get”.

I wonder if ATLAS will switch? Or, I should say, when it will switch! This experiment will go on 20 years. Wonder what version control system will be in ascendance in 10 years?

Update: Below, Dale included a link to a video of Linus talking about Git (and trashing cvs and svn). Well worth a watch while eating lunch!

Tablet Musings January 20, 2010

Posted by gordonwatts in computers.5 comments

Rumor has it that Apple will be announcing a tablet computer next week. As a user of Windows tablet computers for over 5 years now (I’m on my third), I thought I should write something. Perhaps, I should say that this provides me with an excuse to write something… 🙂

I have always owned a convertible tablet pc. In one mode it is a normal laptop, and in the other mode the screen flips around and lays down flat – and I can write on it or otherwise interact with it as a tablet (mine is a >2 year old Lenovo X61T).

How much do I like this? Well… I will never own a different type of computer as long as they make something like this for a reasonable price. The reasons for this are manifold, but I think the big ones are the following.

First, I think with a pen. It is either my age or how my brain is wired, but I need to draw little pictures and arrows to help me organize my thoughts. I like taking a plot I have and writing on it – just the way I did in the old days with my log book, for example. Or if I have to review an analysis paper I mark the PDF up directly with the pen… it is sooo much easier and intuitive than marking up a PDF with Acrobat’s mark-up tools. Due to this feature I’ve almost completely stopped printing out papers.

Second, I lecture. I project my tablet on the very large overhead screen, and write my class lecture notes directly onto the tablet. Given my handwriting this is much easier to read than my chalk-board writing. As an added bonus I don’t get chalk dust all over my a** or dry-erase all over my hand. That last one can be quite an issue, actually, as I’m left handed!

Third is an application called OneNote written by Microsoft. It is part of the Office series. When it came out in 2005 I’d never seen anything like this. The closest that exists now is EverNote and it is still quite a bit different from OneNote. It has replaced the big heavy logbooks I used to carry around. I have close to 4 gigabytes of information in this notebook format now. And it is all searchable (including my handwriting).

As far as I’m concerned, this tablet technology totally revolutionized the way I use and interact with computers, so I’m a huge proponent of it (obviously).

That said, things aren’t perfect. For example, the X61T is both a laptop and a tablet. As a result it isn’t the best it could be for either. The tablet screen could be brighter in laptop mode, and it could have a track pad rather than a joystick. In tablet mode it is carrying along all that extra hardware to be a decent laptop; as a result it is too heavy in this mode. Don’t get me wrong – this laptop is over two years old and at the moment I don’t really feel any desire to buy a new model. Ideally, I’d love a slate computer – this is just the screen and the computer bit – so much smaller. Like a thick pad of paper. And also a light laptop to carry around. Then I could have the best of both worlds. However – this is too expensive. The combination would be well over $3K, and would weigh more in my carry-on baggage when I went flying. Also, the X61T is old enough that the very high resolution screen (which makes PDFs look very good) doesn’t have touch. That would be a nice addition for browsing the web.

I have only rumors to go on for the Apple tablet. It sounds like it will have only a touch sensor on the screen – like a big iPod Touch or iPhone, rather than the very high resolution pen digitizer required for writing. If that is the case this announcement won’t be very interesting to me. However, I’m very much in the minority here – I’ve not met many people who are as passionate about their tablets as I am – and there are a lot of people who own these machines (but many of them never use them in tablet mode at all). Indeed, I worry that a computer you can write on will become a smaller and smaller market due to things like the Apple tablet, and that will only drive the price of my kind of tablet up, eventually forcing me back to paper. 🙂