Christmas Project December 28, 2017

Posted by gordonwatts in Uncategorized.

Every Christmas I try to do some sort of project. Something new. Sometimes it turns into something real, and lasts for years. Sometimes it goes nowhere. Normally, I have an idea of what I’m going to attempt – usually it has been bugging me for months and I can’t wait till break to get it started. This year, I had none.

But, I arrived home at my parents’ house in New Jersey and there it was waiting for me. The house is old – more than 200 years old – and the steam furnace had just been replaced. For those of you unfamiliar with this method of heating a house: it is noisy! The furnace boils water, and the steam is forced up through the pipes to cast iron radiators. The radiators hiss through valves as the air is forced up – an iconic sound from my childhood. Eventually, after traveling sometimes four floors, the super hot steam reaches the end of a radiator and the valve shuts off. The valves are cool – heat sensitive! The radiator, full of hot steam, then warms the room – and rather effectively.

The bane of this system, however, is that it can leak. And you have no idea where the leak is in the whole house! The only way you know: the furnace reservoir needs refilling too often. So… the problem: how to detect that the reservoir needs refilling? Especially with this new modern furnace which can automatically refill its reservoir.

Me: Oh, look, there is a little LED that comes on when the automatic refilling system comes on! I can watch that! Dad: Oh, look, there is a little light that comes on when the water level is low. We can watch that.

Dad’s choice of tools: a wifi cam that is triggered by noise. Me: A Raspberry Pi 3, a photo-resistor, and a capacitor. Hahahaha. Game on!

What’s funny? Neither of us has detected a water refill since we started this project. In the first picture at the right you can see both of our devices – in the foreground, taped to the gas input line, is the cam watching the water refill light through a mirror, and in the background (look for the yellow tape) is the Pi taped to the refill controller (with the capacitor and sensor hanging down looking at the LED on the bottom of the box).

I chose the Pi because I’ve used it once before – for a Spotify end-point. But never for anything that it is designed for. An Arduino is almost certainly better suited to this – but I wasn’t confident that I could get it up and running in the 3 days I had to make this (including time for ordering and shipping of all parts from Amazon). It was a lot of fun! And consumed a bunch of time. “Hey, where is Gordon? He needs to come for Christmas dinner!” “Wait, are you working on Christmas day?” – for once I could answer that last one with an honest no! Hahaha.

I learned a bunch:

- I had to solder! It has been a loooong time since I’ve done that. My first graduate student, whom I made learn how to solder before I let him graduate, would have laughed at how rusty my skills were!

- I was surprised to learn, at the start, that the Pi has no analog to digital converter. I stole a quick and dirty trick that lots of people have used to get around this problem: time how long it takes to charge a capacitor up through a photoresistor. This is probably the biggest source of noise in my system, but it does for crude measurements.

- I got to write all my code in Python. Even interrupt handling (ok, no callbacks, but still!)

- The Pi, by default, runs a full build of Linux. Also, Python 3! I made full use of this – all my code is in Python, and a bit in bash to help it get going. I used things like cron and pip – they were either there, or trivial to install. Really, for this project, I was never conscious of the Pi being anything less than a full computer.

- At first I tried to write auto detection code – that would see any changes in the light levels and write them to a file… which was then served on a simple nginx webserver (seriously – that was about 2 lines of code to install). But with the noise in the system, plus the fact that we’ve not had a fill, I don’t know what my signal looks like yet… So, that code will have to be revised.

- In the end, I have to write a file with the raw data in it, and analyze that – at least, until I know what an actual signal looks like. So… how to get that data off the Pi – especially given that I can’t access it anymore now that I’ve left New Jersey? In the end I used some Python code to push the files to OneDrive. Other than figuring out how to deal with OAuth2, it was really easy (and I’m still not done fighting the authentication battle). What will happen if/when it fails? Well… I’ve recorded the commands my Dad will have to execute to get the new authentication files down there. Hopefully there isn’t going to be an expiration!
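
The capacitor-timing trick from the list above can be sketched roughly as follows. This is not the code running on the Pi in the basement: `SimulatedPin`, `charge_count`, and every component value below are hypothetical, and the pin is simulated so the sketch runs anywhere. On real hardware the two pin methods would instead drive and read an actual GPIO pin.

```python
import math


class SimulatedPin:
    """Hypothetical stand-in for a GPIO pin wired to a photoresistor and capacitor."""

    def __init__(self, resistance_ohm, capacitance_f=1e-6,
                 vcc=3.3, v_high=1.3, dt=1e-5):
        self.tau = resistance_ohm * capacitance_f  # RC time constant in seconds
        self.vcc = vcc          # supply voltage (assumed)
        self.v_high = v_high    # voltage at which the pin reads "high" (assumed)
        self.dt = dt            # simulated time that passes per poll (assumed)
        self.t = 0.0

    def discharge(self):
        """Drain the capacitor so the next measurement starts from 0 V."""
        self.t = 0.0

    def is_high(self):
        """Advance simulated time one step and report whether the pin reads high."""
        self.t += self.dt
        voltage = self.vcc * (1.0 - math.exp(-self.t / self.tau))
        return voltage >= self.v_high


def charge_count(pin, max_polls=100_000):
    """Count polls until the capacitor charges past the logic-high threshold.

    More light means lower photoresistor resistance, a faster charge,
    and therefore a smaller count.
    """
    pin.discharge()
    polls = 0
    while not pin.is_high():
        polls += 1
        if polls >= max_polls:  # give up rather than spin forever in the dark
            break
    return polls


bright = charge_count(SimulatedPin(resistance_ohm=1_000))    # LED on (assumed value)
dark = charge_count(SimulatedPin(resistance_ohm=100_000))    # LED off (assumed value)
print(bright, dark)
```

The count is only a proxy for light level, and on a real Pi the polling loop competes with everything else Linux is doing, which is one plausible reason this measurement is so noisy.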

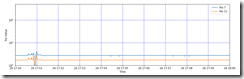

To analyze the raw data I’ve used a new tool I’ve recently learned at work: numpy and Jupyter notebooks. They allow me to produce a plot like this one. The dip near the left hand side of the plot is my Dad shining the flashlight at my sensors to see if I could actually see anything. The joker.
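
As a rough illustration of that kind of analysis, here is a minimal numpy sketch that flags a dip like the flashlight one. The readings are synthetic, and `find_dips` and its threshold are assumptions made for the example, not the contents of my notebook.

```python
import numpy as np


def find_dips(samples, n_sigma=5.0):
    """Return indices of samples that fall well below the typical level.

    The baseline is the median, and the noise scale is estimated from the
    median absolute deviation (MAD) so that the dip itself barely affects it.
    """
    samples = np.asarray(samples, dtype=float)
    baseline = np.median(samples)
    mad = np.median(np.abs(samples - baseline))
    sigma = 1.4826 * mad  # MAD -> standard deviation for Gaussian noise
    return np.flatnonzero(samples < baseline - n_sigma * sigma)


# Synthetic stand-in for the raw file: noisy charge times with one dip
# (more light -> faster charge -> lower reading).
rng = np.random.default_rng(0)
readings = 1000.0 + rng.normal(0.0, 20.0, size=500)
readings[40:60] -= 600.0  # the "flashlight" (made-up size and position)

dip_idx = find_dips(readings)
print(dip_idx.min(), dip_idx.max())
```

A real refill signal may look nothing like this, which is exactly why the raw data gets saved first and the threshold chosen later.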

Pretty much the only thing I’d used before was Linux, and some very simple things with an older Raspberry Pi 2. If anyone is on the fence about this – I’d definitely recommend trying it out. It is very easy and there are thousands of web pages with step by step instructions for most things you’ll want to do!

In Praise of 7” October 23, 2012

Posted by gordonwatts in Uncategorized. 89 comments

I have lots of posts I’d like to write, but I have no time. I swear! Unless external events force my hand. In this case, I suppose I should be writing about the apparent crazy conviction of the geologists who failed to predict a deadly earthquake in Italy (really not possible), or science policy of the USA’s presidential candidates (wish I had a nice link).

But in this case, I want to talk about tech. I’ve been using a small, 7” tablet for over a year now. My first was the B&N Nook Tablet that was a gift about a year ago. At the time it was the best for the low price on the market – beating the Fire easily on tech grounds (longer battery life, lighter, thinner, and it had an SD slot for expanded memory). This year when everyone had announced their tablets I decided to upgrade to the Google Nexus 7.

My path to these and how I use them today is perhaps a little odd. It was completely motivated by the New Yorker and the Economist. I receive both magazines (thanks Dad, Uncle Steve!!) and love them. However, I can never keep up. When I go on long plane flights I would stash 10 issues or so of each in my bag and carry them across the Atlantic to CERN or wherever I was traveling. And often I would carry 9 issues back. You know how heavy & fat those are? Yes. 1st world problem.

The nook was fantastic in solving this. And if I was away for more than a week I could still get new issues. I soon installed a few other apps – like a PDF reader. Suddenly I was no longer printing out lecture notes for my class – I’d load them onto the nook and bring them with me that way. I could keep the full quarter of lecture notes with me at all times for when a student would ask me something! I try to keep up on blogs, and I managed to side-load gReader before B&N locked down the nook. I soon was putting comments in papers and talks that I had to review – very comfortable sitting on the couch with this thing!

As the new crop of tablets showed up I started looking for something that was faster. And perhaps with a more modern web browser. The main thing that drove this was viewing my class notes in the PDF viewer – sometimes a 5 second lag would interfere with my lecture when I was trying to look up something I’d written quickly. Amazon’s HD Fire and B&N’s new nooks were pretty disappointing, and so I went with the Nexus 7. The performance is great. But there was something else I’d not expected.

I know this is a duh for most people: but the importance of a well stocked app store. Wow. Now the Nexus 7 is very integrated into my workday. I use it constantly! My todo lists, some of my lab notebooks, reading and marking up papers and talks, all that is done on this thing now. B&N’s app store is ok, but nothing like the Google app store – pretty much whatever I want is there, and with Google’s free $25 for the app store to spend with the purchase of the Nexus 7, I’ve not actually had to spend a cent… and having now owned this thing for about a month my app purchasing has pretty much dropped off to zero. Basically, all I take back and forth to work now are my reading glasses and the Nexus 7 (it was the same with the tablet). I put Dropbox on it, and… well, it is all there.

I have a few complaints. About the hardware – the 16 GB version is barely enough – because I want to be able to load it up with TV/Movies/DVD’s for my long flights. For everything else (music included) the 16 GB is plenty. I think I can connect a USB key to the device, but it was very nice having all that extra space in the nook tablet with its SD slot. The battery life is worse than the nook – it will make it only through two days of heavy use. The nook tablet would go 3 or 4 (but I didn’t use it nearly as much, so this might not be a fair comparison). This guy has NFC, which if I understand the tech right, should be so much better than Bluetooth – so I’m eager to try that out… when I get other devices that support it.

The rest of the complaints I have are due to software and thus can be easily updated. For example, Microsoft’s OneNote app for android doesn’t display handwriting. Many of my logbooks contain handwriting. Also the email app is really awful (seriously??) – though I should add it is serviceable for quick checks, triage, and reading. The only other mobile smart-device I own is a Windows Phone 7.5 – and the design and how the interface flows on android isn’t as nice or as integrated, but with Android 4.1 Google has done a great job. SkyDrive, which I like a lot better than dropbox, is on Android, but it doesn’t support in-place-editing (i.e. open a PDF file, annotate it, have it put back up to the cloud). With 7 GB free (25 because I was an early adopter), I’d drop dropbox if SkyDrive supported this on Android.

If you are still reading, I’m sure you know what triggered this post: Apple’s rumored 7” tablet that will be announced tomorrow. If you are locked into the Apple eco-system, and your work load looks anything like mine, you should get it. Otherwise, go with the Nexus 7 (at $250).

My wife has an older iPad, and I’ve played around with other iPads – for whatever reason, people don’t seem to carry them around to meetings, etc., very often. And I see people using them, but often not reading papers, etc. – but propped up on the stand and watching a movie. Also, the 10” form factor makes it very difficult to hold the tablet in your hand and thumb type: you need very big hands. For this sort of task, the 7” is perfect.

That isn’t to say that the 10” form factor isn’t great in itself. Microsoft with its W8 release is going to have a bunch of these tablets – and I can’t wait to buy one. Of course, for those of you who know me, my requirements are going to be a little weird: it must have an active digitizer. This is what allows you to write with a pen (as on my Tablet PCs). Then I can finally get rid of the Tablet PC which is a compromise, and I can carry something optimized for each task: the 7” for quick work and reading, the 10” for a lab notebook, and an ultra-portable for the real work. Wait. Am I going to carry three now? Arrgh! What am I doing!?!?

We only let students do posters June 5, 2012

Posted by gordonwatts in Uncategorized. 6 comments

I’m here at the PLHC conference in Vancouver, Canada (fantastic city, if you’ve not visited). I did a poster for the conference on some work I’ve done on combining the ATLAS b-tagging calibrations (the way their indico site is set up I have no idea how to link to the poster). I was sitting in the main meeting room, the large poster tube next to my seat, when this friend of mine walks by:

“Hey, brought one of your student’s posters?”

“Nope, did my own!”

“Wow. Really? We only let students do posters. I guess you’ve really fallen in the pecking order!”

Wow. It took me a little while to realize what got me upset about the exchange. So, first, it did hit a nerve. Those that know me know that I’ve been frustrated with the way the ATLAS experiment assigns talks – but this year they gave me a good talk. Friends of mine who I think are deserving are also getting more talks now. So this is no longer really an issue. But comments like this still hit this nerve – you know, that general feeling of inadequacy that is left over from a traumatic high school experience or two. 🙂

But more to the point… are posters really such second class citizens? And if they are, should they remain as such?

I have always liked posters, and I have given many of them over my life. I like them because you end up in a detailed conversation with a number of people on the topic – something that almost never happens at a talk like the PLHC. In fact, my favorite thing to do is give a talk and a poster on the same topic. The talk then becomes an advertisement for the poster – a time when people that are very interested in my talk can come and talk in detail next to a large poster that lays out the details of the topic.

But more generally, my view of conferences has evolved over the past 5 years. I’ve been to many large conferences. Typically you get a set of plenary sessions with > 100 people in the audience, and then a string of parallel sessions. Each parallel talk is about 15-20 minutes long, and depending on the topic there can be quite a few people in the room. Only a few minutes are left for questions. The ICHEP series is a conference that symbolizes this.

Personally, I learn very little from this style of conference. Many of the topics and the analyses are quite complex. Too complex to really give an idea of the details in 15 or 20 slides. I personally am very interested in analysis details – not just the result. And getting to that level of detail requires – for me, at least – some back and forth. Especially if the topic is new I don’t even know what questions to ask! In short, these large conferences are fun, but I only get so much out of the talks. I learn much more from talking with the other attendees. And going to the poster sessions.

About 5 years ago I started getting invites to small workshops. These are usually about a week long, have about 20 to 40 people, and pick a specific topic. Dark Matter and Collider Physics. The Higgs. Something like that. There will be a few talks in the morning and maybe in the afternoon. Every talk that is given has at least the same amount of time set aside for discussion. Many times the workshop has some specific goals – better understanding of this particular theory systematic, or how to interpret the new results from the LHC, or how can the experiments get their results out in a more useful form for the theorist. The afternoons the group splits into working groups – where no level of detail is off-limits. I’ve been lucky enough to be invited to ones at UC Davis, Oregon, Maryland, and my own UW has been arranging a pretty nice series of them (see this recent workshop for links to previous ones). I can’t tell you how much I learn from these!

To me, posters are mini-versions of these workshops. You get 5 or 6 people standing around a poster discussing the details. A real transfer of knowledge. Here, at PLHC, there are 4 posters from ATLAS on b-tagging. We’ve all put them together in the poster room. If you walk by that end of the room you are trapped and surrounded by many of the experts – the people that actually did the work – and you can get almost any ATLAS b-tagging question answered. In a way that really isn’t, as far as I know, possible in many other public forums. PLHC is also doing some pretty cool stuff with posters. They have a jury that walks around and decides what poster is “best” and gives it an award. One thing the poster writer gets to do: give a talk at the plenary session. I recently attended CHEP – they did the same thing there. I’ve been told that CMS does something like this during their collaboration meetings too.

It is clear that conference organizers the world round are looking for more ways to get people attending the conference more involved in the posters that are being presented.

The attitude of my friend, however, is a fact of this field. Heck, even I have it. One of the things I look at in someone’s CV is how many talks they have given. I don’t look carefully at the posters they have listed. In general, this is a measure of what your peers think of you – have you done enough work in the collaboration to be given a nice talk? So this will remain with us. And those large conferences like ICHEP – nothing brings together more of our field all in one place than something like ICHEP. So they definitely still play a role.

Still the crass attitude “We only let students do posters” needs to end. And I think we still have more work to do getting details of our analysis and physics out to other members of our field, theorists and experimentalists.

Jumping the Gun April 4, 2011

Posted by gordonwatts in Uncategorized. 16 comments

The internet has come to physics. Well, I guess CERN invented the web, but, when it comes to science, our field usually moves at a reasonable pace – not too fast, but not (I hope) too slow. That is changing, however, and I fear some of the reactions in the field.

The first I heard about this phenomenon was some results presented by the PAMELA experiment. The results were very interesting – perhaps indicating dark matter. The scientists showed a plot at a conference to show where they were, but explicitly didn’t put the plot into any public web page or paper to indicate they weren’t done analyzing the results or understanding their systematic errors. A few days later a paper showed up on arXiv (which I cannot locate) using a picture taken during the conference while the plot was being shown. Of course, the obvious thing to do here is: not talk about results before they are ready. I and most other people in the field looked at that and thought that these guys were getting a crash course in how to release results. The rule is: you don’t show anything until you are ready. You keep it hidden. You don’t talk about it. You don’t even acknowledge the existence of an analysis unless you are actually releasing results you are ready for the world to get its hands on and play with it as it may.

I’m sure something like that has happened since, but I’ve not really noticed it. But a paper out on the archives on April 1 (yes) seems to have done it again. This is a paper on a Z’ set of models that might explain a number of the small discrepancies at the Tevatron. A number of the results they reference are released and endorsed by the collaborations. But there is one source that isn’t – it is a thesis: Measurement of WW+WZ Production Cross Section and Study of the Dijet Mass Spectrum in the l-nu + Jets Final State at CDF (really big download). So here are a group of theorists, basically, announcing a CDF result to the world. That makes me a bit uncomfortable. What is worse, however, is how they reference it:

In particular, the CDF collaboration has very recently reported the observation of a 3.3σ excess in their distribution of events with a leptonically decaying W± and a pair of jets [12].

I’ve not seen any paper released by the CDF collaboration yet – so that above statement is definitely not true. I’ve heard rumors that the result will soon be released, but they are rumors. And I have no idea what the actual plot will look like once it has gone through the full CDF review process. And neither do the theorists.

Large experiments like CDF, D0, ATLAS, CMS, etc. all have strict rules on what you are allowed to show. If I’m working on a new result and it hasn’t been approved, I am not allowed to even show my work to others in my department except under a very constrained set of circumstances*. The point is to prevent this sort of paper from happening. But a thesis, which was the source here, is a different matter. All universities that I know of demand that a thesis be public (as they should). And frequently a thesis will show work that is in progress from the experiment’s point of view – so they are a great way to look and see what is going on inside the experiment. However, now with search engines one can do exactly the above with relative ease.

There are all sorts of potential for over-reaction here.

On the experiment’s side they may want to put restrictions on what can be written in a thesis. This would be punishing the student for someone else’s actions, which we can’t allow.

On the other hand, there has to be a code-of-standards that is followed by people writing papers based on experimental results. If you can’t find the plot on the experiment’s public results pages then you can’t claim that the collaboration backs it. People scouring the theses for results (as you can bet there will be more now) should get a better understanding of the quality level of those results: sometimes they are exactly the plots that will show up in a paper, other times they are an early version of the result.

Personally, I’d be quite happy if results found in theses would stimulate conversation and models – and those could be published or submitted to the archive – but then one would hold off making experimental comparisons until the results were made public by the collaboration.

The internet is here – and this information is now available much more quickly than before. There is much less hiding-thru-obscurity than there has been in the past, so we all have to adjust.

* Exceptions are made for things like job interviews, students presenting at national conventions, etc.

Update: CDF has released the paper…

200 Run 2 Papers from DZERO July 29, 2010

Posted by gordonwatts in Uncategorized. 5 comments

Well, almost. We are at 185 published papers, 4 more accepted, and 7 more submitted. 19 of them have been cited more than 50 times, 5 more than 100, and 2 more than 250. So that makes 196 – so really close.

And this is just Run 2. Another 132 papers were published from Run 1 data. And none of this counts all the preliminary results we’ve put out between the papers, which often require almost as much work.

And the D0 collaboration is about to have its annual workshop – here in Marseille – so it seems like a fitting time to congratulate everyone on this milestone. It is fitting that this comes after a very successful ICHEP conference for the Tevatron experiments and D0.

So, what are those papers cited more than 250 times? One is the DZERO detector upgrade paper. Anytime someone writes about the DZERO detector (including DZERO) they reference this paper. The other one is the Direct limits on the Bs Oscillation Frequency. This paper caused some controversy when it came out as DZERO took a shortcut to beat CDF with this result (indeed, the DZERO detector and DAQ won’t allow it to make as good a measurement as CDF’s). It is good to see a paper like that getting so many citations!

There are of course several I have very fond memories of – like the Observation of Single Top – especially the almost two weeks of sleepless nights caused by Terry Wyatt’s rather innocuous statement during one of the final approval meetings “yeah, you should be able to improve that in an hour or two”.

DZERO has published so many papers that it got me into trouble. When I was up for tenure one thing the College Council required was a complete copy of all the papers I was an author on (the college council is the last group outside the department that reviews your tenure case carefully at UW). This means I had to download the PDF of every single paper from the APS and other journal sites. I then put them together into a giant PDF file. It was more than 2000 pages. I couldn’t print out the required two copies because I was in Europe at the time. So I emailed the PDF file to the department chair’s assistant with the simple text “please print out two copies for my tenure case” and promptly shut off the computer and poured myself a Pastis. Or three. Stitching together 2000 pages of PDF is a lot of work – I deserved it! Turns out that was the easy part.

A few hours later I was ready to crash and I thought I’d check my email to make sure everything was ok. Good news: the 2000+ page PDF file didn’t bounce. Bad news was the string of increasingly frantic emails from first the administrative assistant and then from the department chair. They started with “Thanks! Printing now on my desktop printer!” That was a bad sign: that desktop printer is one of those small slow printers that prints out single sided and one sheet at a time – designed to print out one or two page letters so they can be signed and mailed. A few hours (!!) later “I’m at page 200 and it has already jammed twice. How long is this?” and “I just checked the PDF, this is 2000 pages! You’re crazy!” and then the first email from the department chair that simply said “You might be buying us a new printer” and then “Scratch that, get me a new administrative assistant, I think she is about to quit” and finally “ok, all done. But you had better send her (and me) a big box of chocolates and flowers.” As you might imagine my heart was beating faster and faster as I read these messages. I collapsed and slept.

It wasn’t over. The next morning there is an email broadcast to the department saying “We are aware that APS has shut off access to all of their journals for everyone at the University of Washington. This is not part of a journal budget cut; we will send more information as soon as we have it.” And then about 30 minutes later another email had been sent “The shut off was due to someone downloading a huge number of papers from UW and, it appears, from inside this department.” Great. I caused the APS to shut off the whole university. I had to get on the phone to someone at APS to explain myself before they would turn access back on.

I should point out that while DZERO got me into trouble there, without the amazing success of the experiment I never would have gotten tenure. I’ve been hugely lucky to work with a lot of truly excellent physicists, first from Dave and Rich at Brown who were willing to take a chance on a fresh graduate student from CDF to Henry at UW. And over the course of these 200 papers I’ve been lucky enough to work with 3 students – Andy, Thomas, and John, and Aran as a postdoc. It is with some pride that I note they are now all either doing better than I am or obviously on their way to doing better than I have.

On a more somber note, I asked Stefan, one of the co-spokespeople, to send me a copy of the plaque down in the control room. As far as DZERO’s ability to mark passing time, there are 18 people that have helped this experiment and didn’t live to see the 200th paper. I’m not going to list all 18 (though I’d be happy to post the plaque if people request it), but I’d like to mention several that I knew myself – Dan Owen (who can forget?!) who worked on the calorimeters and trigger, Andrzej Zieminski who knew my father and did a lot for our b-physics program, Rich Smith who worked so hard on our solenoid (and perhaps the recent dimuon asymmetry paper is in part thanks to!), and Harry Melanson who did a great deal of software and operations work.

I don’t think most of us are here – now or anytime in the past – because this was “just a job.” No one is paid enough to deal with the DZERO software framework – though I should note the LHC ones make ours look like a dream. We are here and contributed to whatever number of those 200 papers because we believe the world must understand the fundamental laws that govern it, because we like solving very difficult problems, we like playing with cool toys (like the DZERO detector), and because we like working with each other. And, on a personal note, it is good to know that I can still break the DAQ system (yes, that 10 GB log file that is forcing resets at 1:30 am and 7:30 am is my fault).

I bet that if you add the Run 1 papers and the Run 2 papers together we will break 400 before we are done!

The ICHEP Shuffle July 15, 2010

Posted by gordonwatts in Uncategorized.

Or maybe it should be called the ICHEP Crunch. We are one week out now. And from an experimentalist at-a-large-collider-experiment’s point-of-view, ICHEP is almost settled. The almost-final versions of all the plots are prepared, the supporting text sanitized of jargon is being fine-tuned, and the large collaborations are getting their last review in of the results. Heck, even some early results are starting to trickle out: CDF has released two Higgs searches, WH and ZH associated production (no excess!!) and a search for a standard model Higgs decaying to taus (find all CDF results here), and DZERO has a search for a t’ quark (find all DZERO results here).

It feels a little bit like the night before Christmas. It is the calm that is so spooky… Which is in direct contrast, of course, to what is going on outside the experiments in the papers and other blogs…

I’m fortunate enough to be on two of the experiments, ATLAS and DZERO, and on each experiment I’m participating in two sides of the shuffle. On DZERO I have a student working on getting one of the results ready. I bet that the “ICHEP deadline” was first talked about almost 6 months ago. That is about the time that the pressure started building for the folks doing the real work in the experiments. Ever since then each time someone wants to add something new, or spend the time to better understand and reduce a systematic error, they have to ask themselves “does this mean not releasing it for ICHEP?”*

In ATLAS I help run one of the groups producing results. This is management, not physics I’m doing there. The goal for people doing this task is to make sure that all the results are high enough quality to enter the review process. Sometimes this means convincing people to drop a plot, or add an extra plot. By the time someone like me gets heavily involved in the day-to-day of the analyses being prepared we are getting very close to the internal review process.

Back on DZERO I’m also helping out with one of the reviews of an analysis. Once the analyzers have put the finishing touches on their analysis, and the people running their physics group give the ok, the analysis is handed off to an internal review group. These people are meant to be independent reviewers of the analysis, and are supposed to go over it with a fine-toothed comb. Unlike external reviewers, they have access to all the internal information of the experiment. The review is based on an “analysis note” and supporting documentation. Just the note can be 100’s of pages long in case of a complex analysis (like the Higgs searches from CDF I mentioned above). If no problems are found this review probably takes about a week. But a fresh set of eyes always turns up new problems. It is a very intense time for the reviewers and the analyzers: the reviewers send questions, and the analyzers respond as fast as they can so that the review doesn’t get stuck and miss ICHEP. BTW, if you watch the experiments you’ll often see one or two or three analyses come out just after ICHEP – these are the ones where an issue was raised and couldn’t be addressed in time to make it for the conference.

Finally, with that done, the ICHEP shuffle enters its last phase: collaboration review. The now perfected analysis along with documentation suitable for everyone to read (which may be a journal paper draft) is thrown up on an internal web page and a message is broadcast to the complete collaboration (all 600 for DZERO or 3000 for ATLAS): “Our experiment is going to release this work – this week is the last chance to raise issues before it is made public!”

At the same time this review is ongoing, everyone is putting together their ICHEP talks, running practice talks to make sure they are high quality, and answering questions that come in from the collaboration. A popular analysis can get 100’s of comments, for example (an unpopular one might get just a few). Each comment must be reviewed and answered by the analyzers and the answers cross-checked by the internal review. At the same time public web pages are being prepared with high quality versions of all the plots and links to the supporting text and documents.

And then ICHEP starts!

So… you might ask… what defines the length of the ICHEP Shuffle? Which is about 6 months? At the two ends of the year there are two large sets of conferences. ICHEP this year is in the summer, and in the winter is the Moriond series. I’ll bet you good money that I’ll see emails with the title “Moriond Analysis Planning” hitting my Inbox the week after ICHEP is over.

But, for us in these large collider experiments, ICHEP is the breather between the dances. A chance to relax, look around, see what everyone else is doing, get some new ideas, and maybe even explore Paris!

[Cross-posted on the ICHEP blog]

Physics at the LHC – What did Guido mean!? June 10, 2010

Posted by gordonwatts in Uncategorized.3 comments

My summer has started. And the first stop is the Physics at the LHC 2010 conference (or pLHC as it has been known internally by the experiments).

As Barbara already mentioned, this conference, occurring this week in Hamburg, Germany, is a preview of results we might expect at ICHEP.

It is Wednesday morning and all the LHC experiments have big plenary talks giving overviews of their current physics results. One of the CMS results has already been discussed by Tommaso. The results all show the first time the experiments are gingerly dipping their foot into the pool of physics. Ok, so it is the shallow end right now. Observation of many quark-onia bumps, but also some real new measurements now done at the LHC’s new higher-than-ever energy, 7 TeV. And they also include the first observation of the W boson and the Z boson at the LHC. Now we’re talking!

One fascinating conversation occurred right at the start of the meeting, during the introductory talk by Guido Tonelli, the spokesperson for CMS (video available). Sitting in the audience was Steven Myers from CERN, who runs the LHC (and who also gave a talk on the present and near future of the LHC machine). Both Guido and Fabiola Gianotti (ATLAS spokesperson) pleaded with Steve to give them more luminosity. Guido went one step further and said that if they can give CMS enough luminosity in the next month they will measure the Standard Model for ICHEP.

What does that mean? Both experiments have observed the W boson now. Does that mean measuring its cross section? Or an observation of top quark production? Is there any reasonable plan for the LHC that would give it enough luminosity to observe top quark production!? Or was it just a flashy statement made to urge Steve to give the experiments more data? Does CMS have something up their sleeve? I guess time will tell… And both CMS and ATLAS will push their data as far as they can.

Update: at the end of the CMS talk, slide 54, there is the statement “100 nb-1, 380 W and 35 Z”, and the speaker said “this we can be sure of.” So, that gives the scale of the CMS plans. And let’s hope the LHC can actually give us that 100 nb-1 of data!
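
To get a feel for that scale, here is a minimal Python sketch of the arithmetic. It uses only the numbers quoted on the slide and assumes the event count grows linearly with integrated luminosity, with the selection unchanged. The function name `expected_events` and the 10 nb-1 example are my own illustration, not something CMS showed.

```python
# Yields quoted on the CMS slide for 100 inverse nanobarns of data.
REFERENCE_LUMI_NB = 100.0
REFERENCE_YIELDS = {"W": 380, "Z": 35}


def expected_events(lumi_nb: float) -> dict:
    """Scale the quoted yields linearly to another integrated luminosity.

    Assumption (mine, for illustration): the event count is proportional
    to integrated luminosity and the selection efficiency does not change.
    """
    scale = lumi_nb / REFERENCE_LUMI_NB
    return {boson: count * scale for boson, count in REFERENCE_YIELDS.items()}


if __name__ == "__main__":
    # Compare a tenth of the hoped-for dataset with the full 100 nb^-1.
    for lumi in (10.0, 100.0):
        print(lumi, expected_events(lumi))
```

In other words, the slide works out to a bit under 4 W candidates and about a third of a Z candidate for every inverse nanobarn delivered, which is why every bit of luminosity matters this early on.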

More Input Types for the Logbook March 20, 2010

Posted by gordonwatts in Uncategorized.5 comments

The comments to that last post pointed out there were a few other things people want to put in their logbooks. I’m biased by what I use of course, and the way I filtered the previous email reflected that, I’m afraid.

Pictures

Besides the comments I made last time, it was pointed out that integration with a cell phone is a definite plus. Modern cell phone cameras are already connected to wireless networks of one sort or another. It should allow you to add things into your logbook right away – similar to the Eye-Fi that I mentioned last time.

Actually, as far as I know, at least two programs already support this – Evernote and OneNote. In both cases you can view your logbooks on your phone, and insert notes, etc. I’ll talk more about the programs in my next post on this.

Code

This got left off my previous list for two reasons. First is that over the last 6 months the amount of actual code I’ve written has been much less than normal – so I’m not thinking about this aspect of things nearly as much.

The second reason is code repositories, like cvs and svn. At least in particle physics almost all the code we write is in one of these two repositories. For those that don’t have experience with code repositories: they allow you to track all the changes you make to your code, and to specify a point-in-time where everything works. You can go back to that point in time whenever you like and get the code exactly as it existed then no matter what state it has evolved to.
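
If you have never used one, a toy model may help. The Python sketch below is not how cvs or svn work internally (real systems store changes far more cleverly and live on a server); it is a hypothetical in-memory class, `ToyRepository`, that I made up to show the two ideas above: every change is recorded, and you can name a point in time and get back exactly what existed then.

```python
class ToyRepository:
    """A toy, in-memory stand-in for a code repository (not cvs or svn)."""

    def __init__(self):
        self._revisions = []  # list of (message, snapshot of all files)
        self._tags = {}       # tag name -> revision number

    def commit(self, files: dict, message: str) -> int:
        """Record a full snapshot of the files and return its revision number."""
        self._revisions.append((message, dict(files)))
        return len(self._revisions)

    def tag(self, name: str, revision: int) -> None:
        """Give a memorable name to a revision, e.g. one where everything works."""
        if not 1 <= revision <= len(self._revisions):
            raise ValueError(f"no such revision: {revision}")
        self._tags[name] = revision

    def checkout(self, ref) -> dict:
        """Return the files exactly as they were at a revision number or tag."""
        revision = self._tags[ref] if isinstance(ref, str) else ref
        if not 1 <= revision <= len(self._revisions):
            raise ValueError(f"no such revision: {revision}")
        return dict(self._revisions[revision - 1][1])

    def log(self) -> list:
        """List (revision number, message) pairs, oldest first."""
        return [(i + 1, msg) for i, (msg, _) in enumerate(self._revisions)]


if __name__ == "__main__":
    repo = ToyRepository()
    first = repo.commit({"fit.py": "bins = 10"}, "first working fit")
    repo.tag("works", first)
    repo.commit({"fit.py": "bins = 50"}, "try finer binning")
    print(repo.log())
    print(repo.checkout("works"))
```

No matter how far the code has evolved since, checking out the tag hands back the files as they were when the tag was made. The commit messages are the annotations.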

As you might guess from the way I worded that last bit there – it sounds a lot like a logbook to me. It can even keep track of annotations. And, unlike the way we think of most logbooks, it is collaborative – frequently many of us work together on the same bit of code, coordinating our actions through the code repository. For most of that code I am never too interested in putting it in the logbook. Rather, I will often mention in a sentence the “thing” that I changed or improved.

But if you are using something like Mathematica, or MATLAB, etc., to do a calculation then it may be almost simpler to paste in the code than write it up in words. In some sense, this sounds to me like being able to add TeX easily to the blog – but being able to execute it as well.

And, while speaking of code, possu pointed out this is really useful if one can also have syntax coloring (keywords, variable names, etc., highlighted in different colors).

Moving Beyond PDF May 30, 2009

Posted by gordonwatts in Uncategorized.7 comments

Last time I used iPhone apps as a lead-in to writing about why PDF is no longer the best format to get papers around and read. The problem is that the assumption your-screen-is-about-the-size-of-a-page no longer holds. Here is what a single page from a typical paper in ArXiv looks like:

It looks like that no matter what your screen size is. So if you zoom in to a single column you’ll have to scroll down, then across and up and then down, etc. Yuck.

Here is an example of one program that does what I want – the New York Times reader*. Here it is reading an article on a “small” screen:

Now, for a “larger screen” (I’m simulating this by resizing the window, but you get the idea):

Note how the text columns changed width and resized – and now it added a picture as well (in the first screen size it was on page 2). This is what I want for the ArXiv papers. Automatic reflow depending on the screen size being looked at. On the iPhone you’d imagine that it would do only a single column. Perhaps you could render it in-line in a web page as a single column if you wanted – or just render it in columns. Your browser could become the display application and the document could reflow depending. How sweet would that be?
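
To make the idea concrete, here is a minimal Python sketch of reflow for plain text. It is my own illustration and has nothing to do with how the Times reader actually does it. It assumes a screen measured in characters, a made-up minimum column width of 30, and a gutter of 4 spaces between columns. The function names `choose_columns` and `reflow` are hypothetical.

```python
import textwrap


def choose_columns(screen_width: int, min_col_width: int = 30, gutter: int = 4) -> int:
    """Pick how many text columns fit on a screen of the given character width."""
    return max(1, (screen_width + gutter) // (min_col_width + gutter))


def reflow(text: str, screen_width: int, min_col_width: int = 30, gutter: int = 4) -> list:
    """Lay the text out in as many columns as fit and return the screen lines."""
    n_cols = choose_columns(screen_width, min_col_width, gutter)
    col_width = (screen_width - gutter * (n_cols - 1)) // n_cols
    lines = textwrap.wrap(text, width=col_width)
    rows = -(-len(lines) // n_cols)  # ceiling division: lines per column
    # Fill the first column top to bottom, then the second, and so on.
    columns = [lines[i * rows:(i + 1) * rows] for i in range(n_cols)]
    page = []
    for r in range(rows):
        cells = [
            col[r].ljust(col_width) if r < len(col) else " " * col_width
            for col in columns
        ]
        page.append((" " * gutter).join(cells).rstrip())
    return page


if __name__ == "__main__":
    sample = "Automatic reflow depending on the screen size being looked at. " * 6
    for width in (30, 100):  # a phone-sized screen, then a wider one
        print("\n".join(reflow(sample, width)))
        print("-" * width)
```

The same text comes out as one narrow column on the small screen and as several side-by-side columns on the wide one, with no sideways scrolling in either case. Figures and equations are of course the hard part, and this sketch ignores them.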

So how close are we to that? I don’t really know. HTML will work for most text. I suspect you could do figures pretty well. To first order, if you didn’t need them to float, it probably would be possible to do them in HTML. But for us in physics the killer is equations. There seems to be one possibility out there: MathML. For an example of what this looks like, see UT Austin physicist Jacques Distler’s blog Musings. As Distler points out, WebTex along with modifications to include MathML makes for a pretty decent solution. And the source for the ArXiv files is available too. Perhaps a first solution only takes a server in the clouds doing the conversion and acting as a front-end for CPU-weak devices like smart phones?

Bill Hill, who was one of the inventors of ClearType (which makes text look so much better on a computer screen), writes an interesting blog where he talks about some of these issues (though not the Math aspect). As you can see from spending about 30 minutes there, he claims everything is now in place, but someone has to put it all together…

I think we are at the brink of an explosion in different sized device displays. It is time our display rendering technology caught up with that!

* These images are from version 1 of the reader, which is Windows only. They have a version 2 out now, which is cross platform (on Windows, Apple, and Linux), but I like the Windows only interface better so I’ve not converted yet (but it does the reflow as I’m describing here). But if you own Linux or Apple and you want the offline experience of reading the paper in a much more comfortable environment than the web, I’d definitely recommend checking it out.