CHEP Trends: Multi-Threading May 24, 2012

Posted by gordonwatts in Analysis, CHEP, computers.6 comments

I find the topic of multi-threading fascinating. Moore’s law means that we now are heading to a multi-core world rather than just faster processors. But we’ve written all of our code as single threaded. So what do we do?

Before CHEP I was convinced that we needed an aggressive program to learn multithreaded programming techniques and to figure out how to re-implement many of our physics algorithms in that style. Now I’m not so sure – I don’t think we need to be nearly as aggressive.

Up to now we’ve solved things by just running multiple jobs – about one per core. That has worked out very well up to now, and scaling is very close to linear. Great! We’re done! Lets go home!

There are a number of efforts gong on right now to convert algorithms to be multi-threaded –rather than just running jobs in parallel. For example, re-implementing a track finding algorithm to run several threads of execution. This is hard work and takes a long time and “costs” a lot in terms of people’s time. Does it go faster? In the end, no. Or at least, not much faster than the parallel job! Certainly not enough to justify the effort, IMHO.

This was one take away from the conference this time that I’d not really appreciated previously. This is actually a huge relief: trying to make a reconstruction completely multi-threaded so that it efficiently uses all the cores in the machine is almost impossible.

But, wait. Hold your horses! Sadly, it doesn’t sound like it is quite that simple, at least in the long run. The problem is first the bandwidth between the CPU and the memory and second the cost of the memory. The second one is easy to talk about: each running instance of reconstruction needs something like 2 GB of memory. If you have 32 cores in one box, then that box needs 64 GB of main memory – or more including room for the OS.

The CPU I/O bandwidth is a bit tricky. The CPU has to access the event data to process it. Internally it does this by first asking its cache for the data and if the data hasn’t been cached, then it goes out to main memory to get it. The cache lookup is a very fast operation – perhaps one clock cycle or so. Accessing main memory is very slow, however, often taking many 10’s or more of cycles. In short, the CPU stalls while waiting. And if there isn’t other work to do, then the CPU really does sit idle, wasting time.

Normally, to get around this, you just make sure that the CPU is trying to do a number of different things at once. When the CPU can’t make progress on one instruction, it can do its best to make progress on another. But here is the problem: if it is trying to do too many different things, then it will be grabbing a lot of data from main memory. And the cache is of only finite size – so eventually it will fill up, and every memory request will displace something already in the cache. In short, the cache becomes useless and the CPU will grind to a halt.

The way around this is to try to make as many cores as possible work on the same data. So, for example, if you can make your tracking multithreaded, then the multiple threads will be working on the same set of tracking hits. Thus you have data for one event in memory being worked on by, say, 4 threads. In the other case, you have 4 separate jobs, all doing tracking on 4 different sets of tracking hits – which puts a much heavier load on the cache.

In retrospect the model in my head was all one or the other. You either ran a job for every core and did it single threaded, or you made one job use all the resources on your machine. Obviously, what we will move towards is a hybrid model. We will multi-thread those algorithms we can easily, and otherwise run a large number of jobs at once.

The key will be testing – to make sure something like this actually works faster. And you can imagine altering the scheduler in the OS to help you even (yikes!). Up to now we’ve not hit the memory-bandwidth limit. I think I saw a talk several years ago that said for a CMS reconstruction executable that occurred somewhere around 16 or so cores per CPU. So we still have a ways to go.

So, relaxed here in HEP. How about the real world? Their I see alarm bells going off – everyone is pushing multi-threading hard. Are we really different? And I think the answer is yes: there is one fundamental difference between them and us. We have a simple way to take advantage of multiple cores: run multiple jobs. In the real world many problems can’t do that – so the are not getting the benefit of the increasing number of cores unless they specifically do something about it. Now.

To, to conclude, some work moving forward on multithreaded re-implementation of algorithms is a good idea. As far as solving the above problem it is less useful to make the jet finding and track finding run at the same time, and more important to make the jet finding algorithm itself and the track finding algorithm itself multithreaded.

CHEP Trends: Libraries May 24, 2012

Posted by gordonwatts in Analysis, computers.add a comment

I’m attending CHEP – Computers in High Energy Physics – which is being hosted by New York University this year, in New York City. A lot of fun – most of my family is on the east coast so it is cool to hang out with my sister and her family.

CHEP has been one my favorite conference series. For a while I soured on it as the GRID hijacked it. Everything else – algorithms, virtualization, etc., is making a come back now and makes the conference much more balanced and more interesting, IMHO.

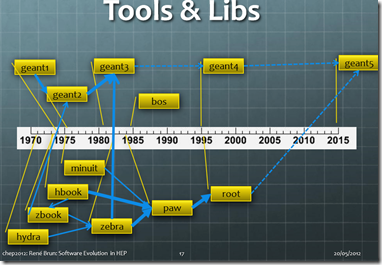

There were a few striking themes (no, one of them wasn’t me being a smart-a** – that has always been true). Rene Brun, one of the inventors of ROOT, gave a talk about the history of data analysis. Check out this slide:

A little while later Jeff Hammerbacher from Cloudera gave a talk (Cloudera bases its cloud computing business on Hadoop). Check this these slide:

These two slides show, I think, two very different approaches to software architecture. In Rene’s slide, note that all the libraries are coalescing into a small number of projects (i.e. ROOT and GEANT). As anyone who has used ROOT knows, it is a bit of a kitchen sink. The Cloudera platform, on the other hand, is a project built of many small libraries mashed together. Some of them are written in-house, others are written by other groups. All open source (as far as I could understand from the talk). This is the current development paradigm in the open source world: make lots of libraries that end-programing can put together like Lego blocks.

This trend in the web world is, I think, the result of at least two forces at place: the rapid release cycle and the agile programming approach. Both mean that you want to develop small bits of functionality in isolation, if possible, which can then be rapidly integrated into the end project. As a result, development can proceed a pace on both projects, independently. However, a powerful side-effect is it also enables someone from the outside to come along and quickly build up a new system with a few unique aspects – in short, innovate.

I’ve used the fruits of this in some of my projects: it is trivial to download an load a library into one of my projects and with almost no work I’ve got a major building block. HTML parsers, and combinator parsers are two that I’ve used recently that have meant I could ignore some major bits of plumbing, but still get a very robust solution.

Will software development in particle physics ever adopt this strategy? Should it? I’m still figuring that out.