Reproducibility… September 26, 2013

Posted by gordonwatts in Analysis, Data Preservation, Fermilab, reproducible.4 comments

I stumbled across an article on reproducibility recently, “Science is in a reproducibility crisis: How do we resolve it?”, with the following quotes which really caught me off guard:

Over the past few years, there has been a growing awareness that many experimentally established "facts" don’t seem to hold up to repeated investigation.

They made a reference to a 2010 alarmist New Yorker article, The Truth Wears Off (there is a link to a PDF of this article on the website, but I don’t know if it is legal, so I won’t link directly here).

Read that quote carefully: many. That means a lot. It would be all over! Searching on the internet, I stumbled on a Nature report. They looked carefully at a database of medical journal publications and retraction rates. Here is a image of the retraction rates the found as a function of time:

First, watch it for the axes here – multiply the numbers on the left by 10 to the 5th (100000), and numbers on the right by 10 to the –2 (0.01). IN short, the peak rate is 0.01%. This is a tiny number. And, as the report points out, there are two ways to interpret the results:

This conclusion, of course, can have two interpretations, each with very different implications for the state of science. The first interpretation implies that increasing competition in science and the pressure to publish is pushing scientists to produce flawed manuscripts at a higher rate, which means that scientific integrity is indeed in decline. The second interpretation is more positive: it suggests that flawed manuscripts are identified more successfully, which means that the self-correction of science is improving.

The truth is probably a mixture of the two. But this rate is still very very small!

The reason I harp on this is because I’m currently involved in a project that contains reproducibility as one of its possible uses: preserving the data of the DZERO experiment, one of the two general purpose detectors on the now-defunct Tevatron accelerator. Through this I’ve come to appreciate exactly how difficult and potentially expensive this process might be. Especially in my field.

Lets take a very simple example. Say you use Excel to process data for a paper you are writing. The final number comes from this spreadsheet and is copied into the conclusions paragraph of your paper. So you can now upload your excel spreadsheet to the journal along with the draft of the paper. The journal archives it forever. If someone is puzzled by your result, they can go to the journal and download the spreadsheet and see exactly what you did (aka modern economics papers). Win!

Only wait. What if the numbers that you typed into your spreadsheet came from some calculations you ran. Ok. You need to include that. And the inputs to the calculations. And so on and so on. For a medical study you would presumably have to upload the anonymous medical records of each patient, and then everything from there to the conclusion about a drug’s safety or efficacy. Uploading raw data from my field is not feasible – it is petabytes in size. This is all ad-hoc – the tools we use do not track the data as they flow through them.

As an early prof I was involved in a study that was trying to replicate and extend a result from a prior experiment. We couldn’t. The group from the other experiment was forced to resurrect code on a dead operating system, and figure out what they did – reproduce it – so they could ask our questions. The process took almost a year. In the end we found one error in that original paper – but the biggest change was just that modern tools were better had a better model of physics and that was the main reason we could not replicate their results. It delayed the publication of our paper by a long time.

So, clearly, it is useful to have reproducibility. Errors are made. Bias gets involved even with the best of intentions. Sometimes fraud is involved. But these negatives have to be balanced against the cost of making all the analyses reproducible. Our tools just aren’t there yet and it will be both expensive and time consuming to upgrade them. Do we do that? Or measure a new number, rule out a new effect, test a new drug?

Given the rates above, I’d be inclined to select the latter. And have a process of evolution of the tools. No crisis.

CHEP Trends: Multi-Threading May 24, 2012

Posted by gordonwatts in Analysis, CHEP, computers.6 comments

I find the topic of multi-threading fascinating. Moore’s law means that we now are heading to a multi-core world rather than just faster processors. But we’ve written all of our code as single threaded. So what do we do?

Before CHEP I was convinced that we needed an aggressive program to learn multithreaded programming techniques and to figure out how to re-implement many of our physics algorithms in that style. Now I’m not so sure – I don’t think we need to be nearly as aggressive.

Up to now we’ve solved things by just running multiple jobs – about one per core. That has worked out very well up to now, and scaling is very close to linear. Great! We’re done! Lets go home!

There are a number of efforts gong on right now to convert algorithms to be multi-threaded –rather than just running jobs in parallel. For example, re-implementing a track finding algorithm to run several threads of execution. This is hard work and takes a long time and “costs” a lot in terms of people’s time. Does it go faster? In the end, no. Or at least, not much faster than the parallel job! Certainly not enough to justify the effort, IMHO.

This was one take away from the conference this time that I’d not really appreciated previously. This is actually a huge relief: trying to make a reconstruction completely multi-threaded so that it efficiently uses all the cores in the machine is almost impossible.

But, wait. Hold your horses! Sadly, it doesn’t sound like it is quite that simple, at least in the long run. The problem is first the bandwidth between the CPU and the memory and second the cost of the memory. The second one is easy to talk about: each running instance of reconstruction needs something like 2 GB of memory. If you have 32 cores in one box, then that box needs 64 GB of main memory – or more including room for the OS.

The CPU I/O bandwidth is a bit tricky. The CPU has to access the event data to process it. Internally it does this by first asking its cache for the data and if the data hasn’t been cached, then it goes out to main memory to get it. The cache lookup is a very fast operation – perhaps one clock cycle or so. Accessing main memory is very slow, however, often taking many 10’s or more of cycles. In short, the CPU stalls while waiting. And if there isn’t other work to do, then the CPU really does sit idle, wasting time.

Normally, to get around this, you just make sure that the CPU is trying to do a number of different things at once. When the CPU can’t make progress on one instruction, it can do its best to make progress on another. But here is the problem: if it is trying to do too many different things, then it will be grabbing a lot of data from main memory. And the cache is of only finite size – so eventually it will fill up, and every memory request will displace something already in the cache. In short, the cache becomes useless and the CPU will grind to a halt.

The way around this is to try to make as many cores as possible work on the same data. So, for example, if you can make your tracking multithreaded, then the multiple threads will be working on the same set of tracking hits. Thus you have data for one event in memory being worked on by, say, 4 threads. In the other case, you have 4 separate jobs, all doing tracking on 4 different sets of tracking hits – which puts a much heavier load on the cache.

In retrospect the model in my head was all one or the other. You either ran a job for every core and did it single threaded, or you made one job use all the resources on your machine. Obviously, what we will move towards is a hybrid model. We will multi-thread those algorithms we can easily, and otherwise run a large number of jobs at once.

The key will be testing – to make sure something like this actually works faster. And you can imagine altering the scheduler in the OS to help you even (yikes!). Up to now we’ve not hit the memory-bandwidth limit. I think I saw a talk several years ago that said for a CMS reconstruction executable that occurred somewhere around 16 or so cores per CPU. So we still have a ways to go.

So, relaxed here in HEP. How about the real world? Their I see alarm bells going off – everyone is pushing multi-threading hard. Are we really different? And I think the answer is yes: there is one fundamental difference between them and us. We have a simple way to take advantage of multiple cores: run multiple jobs. In the real world many problems can’t do that – so the are not getting the benefit of the increasing number of cores unless they specifically do something about it. Now.

To, to conclude, some work moving forward on multithreaded re-implementation of algorithms is a good idea. As far as solving the above problem it is less useful to make the jet finding and track finding run at the same time, and more important to make the jet finding algorithm itself and the track finding algorithm itself multithreaded.

CHEP Trends: Libraries May 24, 2012

Posted by gordonwatts in Analysis, computers.add a comment

I’m attending CHEP – Computers in High Energy Physics – which is being hosted by New York University this year, in New York City. A lot of fun – most of my family is on the east coast so it is cool to hang out with my sister and her family.

CHEP has been one my favorite conference series. For a while I soured on it as the GRID hijacked it. Everything else – algorithms, virtualization, etc., is making a come back now and makes the conference much more balanced and more interesting, IMHO.

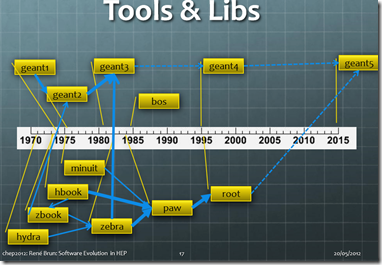

There were a few striking themes (no, one of them wasn’t me being a smart-a** – that has always been true). Rene Brun, one of the inventors of ROOT, gave a talk about the history of data analysis. Check out this slide:

A little while later Jeff Hammerbacher from Cloudera gave a talk (Cloudera bases its cloud computing business on Hadoop). Check this these slide:

These two slides show, I think, two very different approaches to software architecture. In Rene’s slide, note that all the libraries are coalescing into a small number of projects (i.e. ROOT and GEANT). As anyone who has used ROOT knows, it is a bit of a kitchen sink. The Cloudera platform, on the other hand, is a project built of many small libraries mashed together. Some of them are written in-house, others are written by other groups. All open source (as far as I could understand from the talk). This is the current development paradigm in the open source world: make lots of libraries that end-programing can put together like Lego blocks.

This trend in the web world is, I think, the result of at least two forces at place: the rapid release cycle and the agile programming approach. Both mean that you want to develop small bits of functionality in isolation, if possible, which can then be rapidly integrated into the end project. As a result, development can proceed a pace on both projects, independently. However, a powerful side-effect is it also enables someone from the outside to come along and quickly build up a new system with a few unique aspects – in short, innovate.

I’ve used the fruits of this in some of my projects: it is trivial to download an load a library into one of my projects and with almost no work I’ve got a major building block. HTML parsers, and combinator parsers are two that I’ve used recently that have meant I could ignore some major bits of plumbing, but still get a very robust solution.

Will software development in particle physics ever adopt this strategy? Should it? I’m still figuring that out.

The Square Wheel September 19, 2011

Posted by gordonwatts in Analysis, computers, LINQToTTree, ROOT.1 comment so far

Another geek post, I’m afraid. Last week I posted about some general difficulties I was having with doing analysis at the LHC. I actually got a fair amount of response – but all of it was people talking to me here at CERN rather than comments on the blog. So to summarize before moving on…

The biggest thing I got back was that as the corrections become well known, they get automated – so there is no need for this two step process I outlined before – running on MC and data, deriving a correction, and then running a third time to do the actual work, taking the correction into account. Rather, the ROOT files are centrally produced and the correction is applied there by the group. So the individual doesn’t have to worry. Sweet! That definitely improves life! However, the problem remains (i.e. when you are trying to derive a new correction).

I made three attempts before finally finding an analysis framework that worked (well, four if you count the traditional approach of C++, python, bash, and duct tape!). As you can tell – what I wanted was something that would correctly glue several phases of the analysis together. The example from last time:

- Correct the jet pT spectra in Monte Carlo (MC) to data

- Run on the full dataset and get the jetPt spectra.

- Do the same for MC

- Divide the two to get the ratio/correction.

- Run over the data and reweight my plot of jet variables by the above correction.

There are basically 4 steps in this: run on the data, run on the MC, divide the results, run on the data. Ding! This looks like workflow! My firs two attempts were based around this idea.

Workflow has a long tradition in particle physics. Many of our computing tasks require multiple steps and careful accounting every step of the way. We have lots of workflow systems that allow you to assemble a task from smaller tasks and keep careful track of everything that you do along the way. Indeed, all of our data processing and MC generation has been controlled by home-rolled workflow systems at ATLAS and DZERO. I would assume at every other experiment as well – it is the only way.

This approach appealed to me: I can build all the steps out of small tasks. One task that runs on data and one that runs on MC. And then add the “plot the jet pT” sub-task to each of those two, take the outputs, and then have a small generic tasks that would calculate a rate, and then another task that would weight the events and finally make the plots. Easy peasy!

So, first I tried Trident, something that came out of Microsoft Research. An open source system, it was designed to work with a number of scientists with large datasets that required frequent processing (NOAA related, I think). It had an attractive UW, and arbitrary data could be passed between the tasks, and the code interface for writing the tasks was pretty simple.

I managed to get some small things working with it – but there were two big things that caused it to fail. First, the way you pass around data was painful. I wanted to pass around a list of files to run on – and then from that I needed to pass around histograms. I wanted fine grained tasks that would manipulate histograms (dividing the plots) and the same time other tasks would be manipulating whole files (making the plots). Ugh! It was a lot of work just to do something simple! The second thing that killed it was that this particular tool – at the time – didn’t have sub-jobs. You couldn’t build a workflow, and then use it in other workflows. It was my fault that I missed that fact when I was choosing the tool.

So, I moved onto a second attempt. Since my biggest problem had been hooking everything up I decided to write my own. Instead of a GUI interface, I had an XML interface. And I did what is known as “coding-by-convention.” The idea is that I’d set a number of defaults into the design so that it “just worked” as long as the individual components obeyed the conventions. Since this was my own private framework there was no worry that this wouldn’t happen. The framework knew how to automatically combine similar histograms, for example, or if it was presented with multiple input datasets it knew how to combine those as well – something that would have required a another step in the Trident solution.

This solution went much better – I was able to do more than just do my demo – I tried moving beyond the reweighting example above and tried to do something more complex. And here is where, I think, I hit on the real reason that workflow doesn’t work for analysis (or at least for me): you are having to switch between various environments too often. The framework was written in XML. If I wanted a new task, then I had to write C++, or C# (depending). Then there was the code that ran the framework – I’d have to upgrade that periodically.

Really, all I wanted to do was make a stupid plot on two datasets, divide it, and then make a third plot using the first as a weight. Why did I need different languages and files to do that – why couldn’t I write that in a few lines??

Those of you who are active in this biz, of course, know the answer: two different environments. One set of code deals with looping over, possibly, terrabytes of data. That is the loop that makes the plot. Then you need some procedural code to do the histogram division. When that is done, you need another loop of code to do the final plots and reweighting. Take a step back. That is a lot of support code that I have to write! Loading up the MC and data files, running the loop over them, saving the resulting histogram. The number of lines I actually need to create the plot and put the data into the plot? Probably about 2 line or 3. The number of lines I need to actually run that job start to finished and make that plot? Closer to 150 or so, and in several files, some compiled and some interpreted. Too much ceremony for that one or two lines of code: 150 lines of boilerplate for 3 or so lines of the physics interesting code.

So, I needed something better. More on that next week.

BTW, the best visual analysis workflow I’ve seen (but not used) is something called VISPA. Had I known about it when I started the above project I would have gone to it first – it is cross platform, has batch manager, etc., integrated in, etc. (a fairly extensive list). Looking in retrospect it looks like it could support most of what I need to do. I say this only having done a quick scan of its documentation pages. I suspect I would have run into the same problem: having to move between different environments to code up something “simple”.

Reinventing the wheel September 10, 2011

Posted by gordonwatts in Analysis, computers, LINQToTTree, ROOT.add a comment

Last October (2010) my term came to and end running the ATLAS flavor-tagging group. It was time to get back to being a plot-making member of ATLAS. I don’t know how most people feel when they run a large group like this, but I start to feel separated from actually doing physics. You know a lot more about the physics, and your input affects a lot of people, but you are actually doing very little yourself.

But I had a problem. By the time I stepped down in order to even show a plot in ATLAS you had to apply multiple corrections: the z distribution of the vertex was incorrect, the transverse momentum spectrum of the jets in the Monte Carlo didn’t match, etc. Each of these corrections had to first be derived, and then applied before someone would believe your plot.

To make your one really great plot then, lets look at what you have to do:

- Run over the data to get the distributions of each thing you will be reweighting (jet pT, vertex z position, etc.).

- Run over the Monte Carlo samples to get the same thing

- Calculate the reweighting factors

- Apply the reweighting factors

- Make the plot you’d like to make.

If you are lucky then the various items you need to reweight are not correlated – so you can just run the one job on the Data and the one job on the Monte Carlo in steps one and two. Otherwise you’ll have to run multiple times. These jobs are either batch jobs that run on the GRID, or a local ROOT job you run on PROOF or something similar. The results of these jobs are typically small ROOT files.

In step three you have to author a small script that will extract the results from the two jobs in steps 1 and 2, and create the reweighting function. This is often no more difficult that dividing one histogram by another. One can do this at the start of the plotting job (the job you create for steps 4 and 5) or do ti at the command line and save the result in another ROOT file that serves as one of the inputs to the next step.

Steps 4 and 5 can normally be combined into one job. Take the results of step 3 and apply it as a weight to each event, and then plot whatever your variable of interest is, as a function of that weight. Save the result to another ROOT file and you are done!!

Whew!

I don’t know about you, but this looked scary to me. I had several big issues with this. First, the LHC has been running gang-busters. This means having to constantly re-run all these steps. I’d better not be doing it by hand, especially as things get more complex, because I’m going to forget a step, or accidentally reuse an old result. Next, I was going back to be teaching a pretty difficult course – which means I was going to be distracted. So whatever I did was going to have to be able to survive me not looking at it for a week and then coming back to it… and me still being able to understand what I did! Mostly, the way I normally approach something like the above was going to lead to a mess of scripts and programs, etc., all floating around.

It took me three tries to come up with something that seems to work. It has some difficulties, and isn’t perfect in a number of respects, but it feels a lot better than what I’ve had to do in the past. Next post I’ll talk about my two failed attempts (it will be a week, but I promise it will be there!). After that I’ll discuss my 2011 Christmas project which lead to what I’m using this year.

I’m curious – what do others do to solve this? Mess of scripts and programs? Some sort of work flow? Makefiles?? What?? What I’ve outlined above doesn’t seem scalable!